Editor’s Note: Welcome to the Leadership In Test series from software testing guru & consultant Paul Gerrard. The series is designed to help testers with a few years of experience—especially those on agile teams—excel in their test lead and management roles.

In the previous article we looked at the tools you’ll need for effective testing and collaboration. In this article, we’re discussing the final phase of testing and how to confirm that your systems will deliver as planned.

Sign up to The QA Lead newsletter to get notified when new parts of the series go live. These posts are extracts from Paul’s Leadership In Test course which we highly recommend to get a deeper dive on this and other topics. If you do, use our exclusive coupon code QALEADOFFER to score $60 off the full course price!

We’ve come to the final couple of articles in the Leadership In Test series. So far we’ve covered how to plan and manage a test project from start to finish and many of the tools and processes involved.

For this article, I want to discuss Enterprise Business Process Assurance (EBPA) — what it is and how to approach it. We’ll be covering:

- What Is Enterprise Business Process Assurance?

- Understanding Systems Integration and Testing

- Horizontal Testing: The E2E Testing Approach

- Integration Risks and E2E Testing

There’s a lot to cover, so let’s crack on.

What Is Enterprise Business Process Assurance?

Every organisation implementing new systems has experienced problems when using them for the first time. Systems that even slightly mismatch existing infrastructure and business processes can cause havoc and be difficult to repair.

Iterative, agile, and more collaborative practices help, but the challenges of enterprise-wide integration can only be addressed by a final stage of testing.

We call this final phase Enterprise Business Process Assurance (EBPA). Success depends on one or more phases of testing that will demonstrate that the systems, business processes, and data are integrated and deliver the services promised.

There are few scholarly articles on the topic, even though the latter stages of every large-scale project are dominated by this activity. To inform our EBPA strategy, we’ll first take a closer look at the types of systems integration and testing you’ll be dealing with.

Understanding Systems Integration and Testing

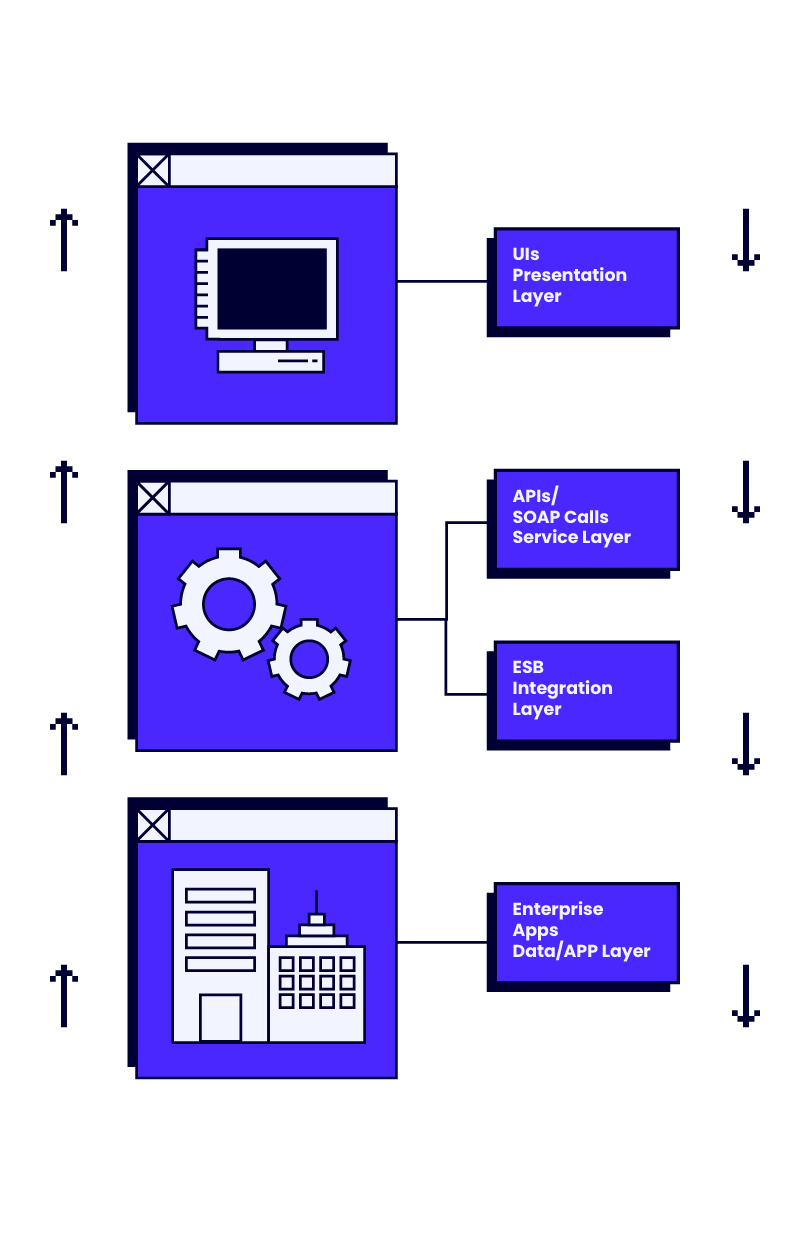

Vertical Integration

From a technical perspective, integration represents the connection between the various layers of technical architecture. Integration testing for developers mostly consists of checks that data accepted through the user interface in an update transaction is successfully stored in the database.

In the other direction, checks confirm that the stored data is accurately presented in the user interface when requested. The connections and paths through the technical architecture can be viewed as vertical paths, and the tests that follow them as vertical integration tests.

Horizontal Integration

End users don’t see the technical architecture and all its complexity. They view the system as a series of features, exercised by different users in what might be called a user journey.

These journeys trace paths in the users’ business processes and access different systems and features at each step in the path using the user interface.

Agile teams work on smaller-scale stories and do not see the larger epic. Developers usually cannot trace and test longer user journeys anyway because they don’t have the environments or data. So, organizations usually rely on larger-scale tests and teams of testers to validate them.

User journeys naturally cover the business process and the system features in combination. In this way, horizontal tests exercise the integration between the systems and the business process.

Vertical integration testing adopts a more technical perspective; horizontal testing adopts a user or business process perspective.

Now let’s use these concepts to construct a model that will help us to understand and plan for EBPA. We’ll reference the horizontal and vertical integration approaches on the model.

A Model for Integration and Testing

Integration is an often misunderstood concept. The integration process actually starts almost as soon as coding begins. You could say that integration commences when we have two lines of code — the second line of code must be integrated with the first. Integration ends when all functional testing ends at user acceptance.

Let’s focus on an example using commercial-off-the-shelf (COTS) and enterprise resource planning (ERP) modules.

The software company creating COTS or ERP modules has done unit and functional testing. When these components arrive they can usually be assumed to work and to integrate technically. But there is always a risk that integrated components from different suppliers interact in unforeseen ways.

Also, if components are customized, their behaviour will change and again this is likely to cause subtle (and not so subtle) side-effects elsewhere. There is still a need for vertical integration to verify the exchange of control and data in the technology stack, and horizontal integration across components and the business process.

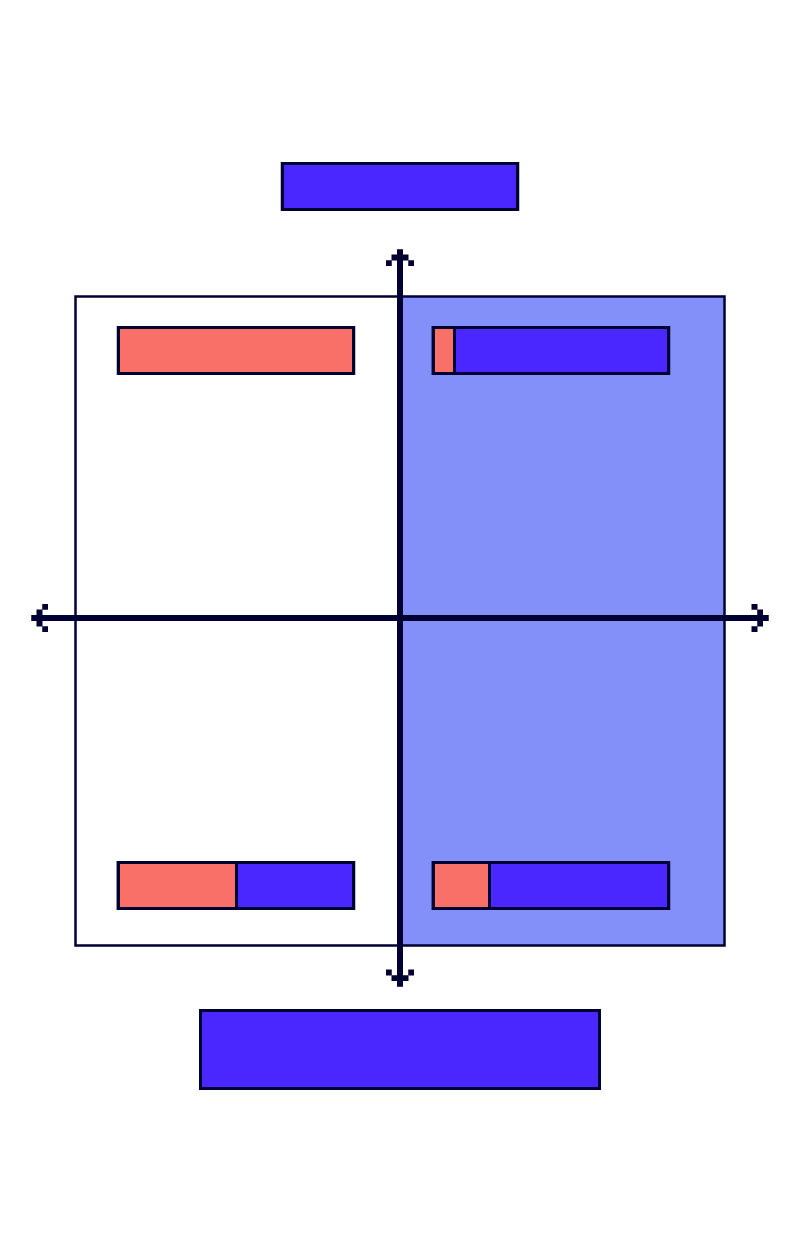

We represent the four integration activities in a four-quadrant model with two axes. The X-axis shows the scale of the system under test: either a single component or sub-system in isolation, or the integrated systems in the context of the business process.

The right-hand side of the model is shaded blue and represents EBPA. Testing our system in the context of other systems (SIT) and the business process (BIT) is what we term Enterprise Business Process Assurance.

Let’s look at the four quadrants in turn.

Module, Feature or Component Testing

The module must be functionally tested whether it is custom-built or is a COTS component. The user experience is always important and must integrate with some step or activity in the business process.

Sub-System Integration Testing

Integration is incremental. As low-level components and features become available, they are integrated into the increasingly large system and tested until the complete, coherent system is ready. We call this sub-system integration testing.

System Integration Testing (SIT)

No system exists in isolation, so when our system is complete, we need to integrate that system with other systems. We install our system in an environment with its interfacing systems and test these systems working as a ‘system of systems’ across these interfaces. Our goal in this stage is to address the technical risks of integration and we call this system integration testing.

Business Integration Testing (BIT)

There is a final stage of integration that aims to ensure that the as-built systems integrate with the business processes and (often manual) procedures of the users of those systems. Including the system’s external interfaces (if not tested already), we validate that the systems and business processes, working in collaboration, provide coherent and efficient user journeys and that they manage and transfer data correctly and consistently. We normally call this business integration testing.

For the remainder of this article, we’ll focus mainly on the horizontal, EBPA side of the model.

Horizontal Testing: The E2E Testing Approach

Horizontal integration is most reliant on what is often called End-to-End (E2E) testing.

E2E testing is a test design technique that invokes a series of connected transactions, typically spanning multiple systems including our system(s) under test. These tests tend to follow user journeys through their business processes.

Given a model of a business process, paths are traced through the process to create a useful series of transactions that simulate the way users will use the system in production.
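As a rough illustration of tracing paths through a process model, the sketch below treats a business process as a directed graph and lists every route from the first step to a terminal step. The order-handling steps and the names `PROCESS_MODEL` and `trace_journeys` are hypothetical, invented for this example; a real model would come from your own flowcharts or swim-lane diagrams.

```python
from typing import Dict, List

# Hypothetical process model: each step maps to the steps that can follow it.
PROCESS_MODEL: Dict[str, List[str]] = {
    "place_order": ["check_credit"],
    "check_credit": ["reserve_stock", "reject_order"],
    "reserve_stock": ["ship_goods", "back_order"],
    "back_order": ["ship_goods"],
    "ship_goods": ["send_invoice"],
    "send_invoice": [],
    "reject_order": [],
}


def trace_journeys(model: Dict[str, List[str]], start: str) -> List[List[str]]:
    """Return every path from `start` to a step with no successors."""
    journeys: List[List[str]] = []

    def walk(step: str, path: List[str]) -> None:
        path = path + [step]
        next_steps = model[step]
        if not next_steps:
            journeys.append(path)  # terminal step reached: one complete journey
            return
        for nxt in next_steps:
            if nxt not in path:  # skip steps already visited so loops cannot recurse forever
                walk(nxt, path)

    walk(start, [])
    return journeys


for journey in trace_journeys(PROCESS_MODEL, "place_order"):
    print(" -> ".join(journey))
```

Each printed path is a candidate E2E test: a series of connected transactions that a tester can then populate with realistic data. In practice you would prioritise paths by risk rather than run all of them.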

These models can be familiar diagrams such as flowcharts or swim-lane diagrams, or they can be more technical representations such as UML sequence or collaboration diagrams. (UML is the Unified Modeling Language, used by many IT organisations.)

E2E tests address specific risks that cannot easily be addressed by earlier testing on sub-systems or environments that depend heavily on stubbed-out interfaces and purely synthetic data.

Acceptance

The notion of ‘fit’ is appropriate for component-component integration, system-system integration, and systems-business process integration.

Acceptance is normally based on the results of horizontal E2E tests because business stakeholders can trust that these tests show how the new system fits and supports their ways of working. Horizontal E2E tests give them confidence that the delivered service will work.

The acceptance process for systems usually depends on successful horizontal E2E testing, at least in part. This is because only E2E tests exercise the system in a realistic environment and simulate realistic user activity.

In many organizations, working systems can only be demonstrated in the final stages of testing, and it is this demonstration that gives stakeholders confidence that the system will work as required.

If you are asked to plan an acceptance test, we expect that the E2E test technique will play a large part in your planning.

Integration Risks and E2E Testing

As discussed, integration risks relate to system-system integration or system-business process integration.

Some organisations choose to split the tests that address these two risk types into System Integration Testing (SIT) and Business Integration Testing (BIT). But often the risks and tests are merged into a single test stage labelled E2E, Acceptance, or Business Testing.

However the testing is structured, it makes extensive use of the E2E test approach explained above. In your environment, the risks you face may be variations on these themes and you may need to adjust the test objective and your test approach accordingly.

The list of risks presented here should be regarded as a starting point only and to remind you where you should be looking to focus your testing. It is likely there are risks specific to your organisation, industry, or technology that you’ll need to add to this starting list.

In the table below, ‘systems’ refers to the collection of integrated systems comprising the new system or systems under development, other legacy or infrastructure systems, and external systems, e.g. those of banks or partnering organizations.

| Risk | Test Objective | Test Approach |
| --- | --- | --- |
| Systems are not integrated (data transfer). | Demonstrate that systems are integrated and perform data transfer correctly. | System Integration Test (SIT) |
| Systems are not integrated (transfer of control). | Demonstrate that systems are integrated (transfer of control with required parameters is done correctly). | SIT |
| Interfaces fail when used for an extended period. | Demonstrate that interfaces can be continuously used for an extended period. | SIT (These checks might also be performed as part of Reliability and/or Failover Testing) |
| Systems are not integrated (data does not reconcile across interfaces). | Demonstrate that systems are integrated (data transferred across interface is consistently used e.g. currency, language, metrics, timings, accuracy, tolerances). | SIT |
| Systems are not synchronized (data transfers not triggered, triggered at the wrong time, or multiple times). | Demonstrate that data transfers are triggered correctly. | SIT |
| Objects or entities that exist in multiple systems do not reconcile across systems. | Demonstrate that the states of business objects are accurately represented across the systems that hold data on the objects. | Business Integration Test (BIT) |
| Systems are not integrated with the business (supply chain) process. | Demonstrate that the systems integrate with business processes and support the supply chain process. | BIT |
| Back-end business processes do not support the Web or mobile front-ends. | Demonstrate that the supply-chain processes are workable and support the business objective. | BIT |
| Integrated systems used by the same staff have inconsistent user interfaces or behavior for similar or related tasks. | Demonstrate that users experience consistent behavior across systems whilst performing similar or related tasks. | BIT (These checks might also be performed during UX checking) |

Notes on the risk table

Some of the risks above need a little more explanation.

Data Transfer Problems

Often, data is transferred between systems across networks. If a transfer fails, whether it runs as a batch process or as a real-time transfer triggered by a user, business, or other system event, then data will be missing in the target system.

Faulty Transfer of Control

A transfer of control is where a user activity or system process transfers the user or process to another feature of the system.

Typically there is a choice of target feature and, depending on the user action, or data content of a transaction, a choice of a different route through the application is made.

When a transfer of control is faulty, the user is taken to the wrong place in the application, or a system process invokes the wrong process and loses synchronisation.
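One way to check transfer of control is with a small decision table that pairs each combination of user action and transaction data with the target feature it should lead to. The sketch below is illustrative only: `next_screen` is a hypothetical stand-in for the routing logic of the system under test, and the payment methods and screen names are assumptions.

```python
from typing import List, Tuple


def next_screen(payment_method: str, basket_total: float) -> str:
    """Hypothetical routing rule standing in for the application under test."""
    if basket_total <= 0:
        return "empty_basket"
    if payment_method == "card":
        return "card_details"
    if payment_method == "invoice":
        return "credit_check"
    return "payment_options"


# Decision table: (payment_method, basket_total, expected_target)
ROUTING_CASES: List[Tuple[str, float, str]] = [
    ("card", 25.0, "card_details"),
    ("invoice", 25.0, "credit_check"),
    ("voucher", 25.0, "payment_options"),
    ("card", 0.0, "empty_basket"),
]


def misrouted_cases(cases: List[Tuple[str, float, str]]) -> List[Tuple[str, float, str, str]]:
    """Return each case whose actual target differs from the expected one."""
    failures = []
    for method, total, expected in cases:
        actual = next_screen(method, total)
        if actual != expected:
            failures.append((method, total, expected, actual))
    return failures


print(misrouted_cases(ROUTING_CASES))
```

An empty list means every route in the table led to the expected target. In a real SIT the call to `next_screen` would be replaced by driving the integrated systems and observing where control actually lands.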

Data Does Not Reconcile

A system might distribute data relating to, for example, money, or asset location, or some physical quantity that must ‘add up’ across all the systems.

For example, where 100 items of stock are purchased and moved, sold, used in manufacturing processes, deemed as faulty and returned or scrapped, the number of items held in each subsystem must reconcile with the original count of 100.
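The stock example can be expressed as a simple reconciliation check. This is a minimal sketch: the system names and quantities are invented for illustration, and in practice each count would be queried from the relevant subsystem.

```python
from typing import Dict


def stock_discrepancy(purchased: int, holdings: Dict[str, int]) -> int:
    """Return purchased minus the total held across all systems (0 means reconciled)."""
    return purchased - sum(holdings.values())


# Hypothetical counts reported by each subsystem for one stock line.
holdings = {
    "warehouse": 40,
    "sold": 35,
    "manufacturing": 15,
    "returned_faulty": 6,
    "scrapped": 4,
}

print(stock_discrepancy(100, holdings))
```

A non-zero result indicates that items have been lost or double-counted somewhere between systems, which is the cue to inspect the individual transfers.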

A variation on this problem concerns the units used by the systems holding the data. Batch sizes or aggregations of counted items must be accounted for, metric and imperial measures must be converted consistently between systems, and so on.
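Where systems hold the same quantity in different units, a reconciliation check needs to normalise values before comparing them. The sketch below assumes two systems recording weight in kilograms and pounds, and an agreed tolerance of 0.01 kg; the function names and the tolerance are assumptions made for the example.

```python
import math
from typing import Tuple

KG_PER_LB = 0.45359237  # the international pound is defined as exactly this many kilograms


def to_kg(value: float, unit: str) -> float:
    """Convert a weight to kilograms; only 'kg' and 'lb' are supported in this sketch."""
    if unit == "kg":
        return value
    if unit == "lb":
        return value * KG_PER_LB
    raise ValueError(f"unsupported unit: {unit}")


def weights_match(a: Tuple[float, str], b: Tuple[float, str], tolerance_kg: float = 0.01) -> bool:
    """Compare two (value, unit) weights after converting both to kilograms."""
    return math.isclose(to_kg(*a), to_kg(*b), abs_tol=tolerance_kg)


print(weights_match((100.0, "kg"), (220.462, "lb")))
print(weights_match((100.0, "kg"), (100.0, "lb")))
```

The tolerance matters as much as the conversion: it should be agreed with the business, because rounding rules often differ between the systems on either side of an interface.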

Systems Not Synchronised

This risk is related to the data transfer problem above. The system processes that perform transfers must be scheduled to run in the right sequence, triggered by appropriately authorised processes or people, and triggered in a timely manner by the appropriate event or combination of events.

Some batch processes must be run hourly, daily, weekly, or at the end of the month, quarter, or year, and these schedules need to be checked. Much functional behaviour depends on the relative timings of transactions or the age of data across systems, and this needs to be checked too.
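A check for this risk might compare the times at which transfers actually ran against the expected schedule, looking for runs that are missing, duplicated, or triggered at the wrong time. The following is a sketch under stated assumptions: the run times would normally be read from a scheduler or job log, and the five-minute tolerance and the function name `check_transfer_triggers` are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def check_transfer_triggers(
    expected: List[datetime],
    actual: List[datetime],
    tolerance: timedelta = timedelta(minutes=5),
) -> Dict[str, List[datetime]]:
    """Classify scheduled runs as missing or duplicated, and list unscheduled runs."""
    findings: Dict[str, List[datetime]] = {"missing": [], "duplicate": [], "unexpected": []}
    matched = set()
    for due in expected:
        # Indices of actual runs that fall within the tolerance of this scheduled time.
        hits = [i for i, ran in enumerate(actual) if abs(ran - due) <= tolerance]
        matched.update(hits)
        if not hits:
            findings["missing"].append(due)
        elif len(hits) > 1:
            findings["duplicate"].append(due)
    # Any run not matched to a scheduled time was triggered at the wrong time.
    findings["unexpected"] = [ran for i, ran in enumerate(actual) if i not in matched]
    return findings


expected = [datetime(2024, 1, 1, hour) for hour in (9, 10, 11)]
actual = [
    datetime(2024, 1, 1, 9, 1),
    datetime(2024, 1, 1, 10, 0),
    datetime(2024, 1, 1, 10, 2),
    datetime(2024, 1, 1, 11, 30),
]
print(check_transfer_triggers(expected, actual))
```

With this sample data the 10:00 run is reported as duplicated, the 11:00 run as missing, and the 11:30 run as unexpected.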

Objects Don’t Reconcile

Rather than counts of objects, money, or physical measurements, these risks relate to the status of objects that are held in distributed systems. For example, the employee status in a personnel record should be consistent across all systems that hold copies of that record. The processes that distribute changes of state across systems holding copies of the same object need to be triggered and their actions checked.
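The employee status example could be checked along the following lines. The system names, employee IDs, and statuses are invented for illustration; a real check would extract the status of each object from every system that holds a copy.

```python
from typing import Dict, Optional


def find_status_mismatches(
    systems: Dict[str, Dict[str, str]],
) -> Dict[str, Dict[str, Optional[str]]]:
    """Return objects whose status differs between systems (None = record absent)."""
    all_ids = set()
    for records in systems.values():
        all_ids.update(records)

    mismatches: Dict[str, Dict[str, Optional[str]]] = {}
    for object_id in sorted(all_ids):
        statuses = {name: records.get(object_id) for name, records in systems.items()}
        if len(set(statuses.values())) > 1:
            mismatches[object_id] = statuses
    return mismatches


# Hypothetical snapshots of employee status taken from three systems.
systems = {
    "hr": {"E1": "active", "E2": "terminated", "E3": "on_leave"},
    "payroll": {"E1": "active", "E2": "active", "E3": "on_leave"},
    "access_control": {"E1": "active", "E2": "terminated"},
}

for employee, statuses in find_status_mismatches(systems).items():
    print(employee, statuses)
```

Here E2 is flagged because payroll still shows the employee as active, and E3 because the record never reached the access control system.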

Systems Not Integrated With Business

The behaviour of systems must synchronise with the intents of users. Typical problems are where the system presents information that is incomplete, out of date, or wrong. The underlying cause is usually that data transfers or synchronisations are not working correctly, but these problems manifest themselves through the user interface.

Back-end Processes Don’t Support Front-End

This kind of problem manifests itself as an inconsistency between systems of record and systems of engagement. Back-end batch processes that are not run frequently enough, or at all, do not make data available to front-end systems of engagement. In the same way, user transactions through systems of engagement are not reflected in systems of record.

Inconsistent User Interfaces

Where a business or service is offered across mobile, web, and kiosk-based interfaces, the user interfaces may behave differently or inconsistently, even though each has been tested independently. For example, different input validation rules are applied, different data fields are captured or displayed, or the sequence of inputs is different.
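Inconsistent validation rules can be exposed by feeding the same inputs to each channel and comparing the outcomes. In the sketch below the three validators are hypothetical stand-ins for the web, mobile, and kiosk front-ends; in a real test each would be replaced by a call that submits the input through the corresponding interface.

```python
import re
from typing import Callable, Dict, List


# Hypothetical phone-number rules for each channel (stand-ins for the real front-ends).
def web_accepts(phone: str) -> bool:
    return re.fullmatch(r"[0-9 ]{10,13}", phone) is not None


def mobile_accepts(phone: str) -> bool:
    return re.fullmatch(r"[0-9]{10,11}", phone) is not None  # no spaces allowed


def kiosk_accepts(phone: str) -> bool:
    return re.fullmatch(r"[0-9 ]{10,13}", phone) is not None


def inconsistent_inputs(
    validators: Dict[str, Callable[[str], bool]], samples: List[str]
) -> Dict[str, Dict[str, bool]]:
    """Return the samples that at least one channel accepts and another rejects."""
    findings: Dict[str, Dict[str, bool]] = {}
    for sample in samples:
        results = {channel: accepts(sample) for channel, accepts in validators.items()}
        if len(set(results.values())) > 1:
            findings[sample] = results
    return findings


validators = {"web": web_accepts, "mobile": mobile_accepts, "kiosk": kiosk_accepts}
samples = ["07700900123", "07700 900123", "abc"]
print(inconsistent_inputs(validators, samples))
```

Only the number containing a space is reported, because the mobile rule rejects it while the web and kiosk rules accept it.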

Final Thoughts

We have presented a model of integration and testing that gives prominence to enterprise-wide integration and the business process. The E2E approach is the only one that requires complete system integration and bases tests on complete user journeys. In this way, stakeholders can see evidence of systems working correctly in a realistic context.

Many organisations rely on users to apply a form of E2E testing in their acceptance tests and to run those tests manually. From experience, I advocate a more systematic, risk-based approach that makes it easier to automate much of the labour-intensive test execution.

As organisations adopt agile and more dynamic continuous delivery development approaches, giving agile teams more responsibility for testing their sub-systems, it is easy to neglect later enterprise-wide integration and acceptance testing.

The EBPA approach, especially if automated, extends the continuous integration regime from sub-system to enterprise business process assurance.

COTS and ERP packages allow hugely capable but complex systems to be implemented with less development effort, but the risks of integration are just as prominent as for custom software developments.

In larger environments, agile and continuous delivery approaches are increasingly popular, so as complexity and scale increase, so do the frequency of delivery and the associated risk.

The solution is clear: organisations must take EBPA seriously. They need to understand integration risks and how to structure testing to address them. Testers must move towards the modern model-based approach and abstract their business processes, their systems, and tests, and implement test automation systematically.
