Businesses rely on seamlessly integrating information from various sources. This is where ETL (Extract, Transform, Load) comes in, which is crucial in building a unified data foundation. However, the ETL process isn't without its hurdles.

This article addresses five common ETL challenges you might encounter and explores the best ETL tools that can help you overcome them, ensuring a smooth and efficient data integration journey.

5 Common ETL Challenges

While crucial for moving and integrating data from multiple sources, ETL processes carry numerous challenges that a developer must navigate.

Poor Quality Data

One of the biggest challenges in ETL processes is data integrity. Inconsistent data can lead to incorrect results that undermine the accuracy and reliability of data analysis. The ETL process requires data from various sources to be compatible and uniform for successful information integration, but variability in these sources in terms of format, structure, and values can delay or even derail the process. Data may have missing values, duplicate information, or even contradictory details. Solving these issues requires substantial time and effort to cleanse and standardize the data into one unified format.
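
As an illustration of the cleansing step described above, here is a minimal Python sketch that standardizes formats, sets aside incomplete rows, and drops duplicates. The record layout, field names, and the two date formats are assumptions made for the example, not taken from any particular source system.

```python
from datetime import datetime

# Hypothetical raw records from two sources with differing formats.
RAW_RECORDS = [
    {"id": "1", "email": "Ann@Example.com ", "signup": "2024-01-05"},
    {"id": "1", "email": "ann@example.com", "signup": "05/01/2024"},  # duplicate
    {"id": "2", "email": "", "signup": "2024-02-10"},                 # missing email
    {"id": "3", "email": "bob@example.com", "signup": "10/03/2024"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed source formats


def parse_date(value):
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def cleanse(records):
    """Standardize fields, reject incomplete rows, and drop duplicates by id."""
    clean, rejected, seen = [], [], set()
    for rec in records:
        row = {
            "id": rec["id"].strip(),
            "email": rec["email"].strip().lower(),
            "signup": parse_date(rec["signup"].strip()),
        }
        if not row["email"] or row["signup"] is None:
            rejected.append(rec)  # keep for review instead of silently dropping
        elif row["id"] in seen:
            continue  # duplicate of an earlier row; first occurrence wins
        else:
            seen.add(row["id"])
            clean.append(row)
    return clean, rejected


clean_rows, rejected_rows = cleanse(RAW_RECORDS)
```

Keeping rejected rows in a separate list, rather than discarding them, makes it possible to review and correct them at the source later.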

Bottlenecks

Bottlenecks in data processing are another common ETL challenge. As data volumes grow, it becomes difficult for systems to process them in a timely manner. This can lead to slow data updates and render data obsolete before it is even used.

Performance Issues

Handling very large volumes of data from many sources is a critical barrier in ETL processes. Optimizing each stage becomes crucial to ensure efficient extraction, transformation, and loading.

Furthermore, as businesses become more data-driven, the volume of real-time data is growing rapidly. ETL processes must therefore cope with these big data workloads and refresh data on time without incurring substantial performance overhead.
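
One common way to keep memory use flat as volumes grow is to stream data through the pipeline in fixed-size batches rather than loading everything at once. The sketch below illustrates the idea with Python generators. The `extract`, `transform`, and `run_pipeline` functions and the row layout are hypothetical stand-ins, not part of any specific tool.

```python
from itertools import islice


def extract(n_rows):
    """Stand-in for a source reader; yields rows lazily instead of building a list."""
    for i in range(n_rows):
        yield {"id": i, "amount_cents": i * 10}


def batched(rows, size):
    """Group an iterator into lists of at most `size` rows."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def transform(batch):
    """Example transformation: convert cents to a currency amount."""
    return [{"id": r["id"], "amount": r["amount_cents"] / 100} for r in batch]


def run_pipeline(n_rows, batch_size, load):
    """Process the source batch by batch; returns the number of batches loaded."""
    batches = 0
    for batch in batched(extract(n_rows), batch_size):
        load(transform(batch))
        batches += 1
    return batches


loaded = []  # stand-in for a target table
batch_count = run_pipeline(n_rows=10, batch_size=4, load=loaded.extend)
```

Only one batch is held in memory at a time, so the batch size can be tuned to the available resources independently of the total data volume.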

Complexity of ETL Scripts

ETL scripts are often hand-written code, which makes maintenance and upgrades difficult. Even minor changes in the source or target data structure might require a complete overhaul of these scripts. Debugging them is a formidable task that can consume significant development resources.
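
One way to limit this maintenance burden is to keep the source-to-target mapping in a declarative structure, so a schema change means editing one entry rather than rewriting transformation code. The following is a minimal sketch of that idea. The column names and the `FIELD_MAP` layout are invented for illustration.

```python
# Hypothetical mapping: target column -> (source column, converter).
FIELD_MAP = {
    "customer_id": ("CUST_NO", int),
    "full_name": ("NAME", str.strip),
    "balance": ("BAL", float),
}


def apply_mapping(source_row, field_map):
    """Build a target row from a source row using the declarative mapping."""
    target = {}
    for target_col, (source_col, convert) in field_map.items():
        if source_col not in source_row:
            # Fail loudly so a renamed source column is noticed immediately.
            raise KeyError(f"source column {source_col!r} missing for {target_col!r}")
        target[target_col] = convert(source_row[source_col])
    return target


row = apply_mapping(
    {"CUST_NO": "42", "NAME": " Ada Lovelace ", "BAL": "10.50"},
    FIELD_MAP,
)
```

If the source later renames `CUST_NO`, only the corresponding entry in `FIELD_MAP` needs to change.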

Data Privacy and Security

Data privacy and security are a major concern during ETL operations. As data is extracted from various sources and moved across systems, multiple points of vulnerability exist where data breaches might occur. The problem is compounded as privacy regulations such as GDPR and HIPAA, along with other compliance requirements governing data handling, become increasingly stringent.
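
One technique for reducing exposure while data moves between systems is to replace sensitive fields with keyed hashes before loading. The sketch below uses Python's standard `hmac` and `hashlib` modules. The field names and the hard-coded key are assumptions for the example, and a real deployment would fetch the key from a secrets manager. Pseudonymized data is generally still treated as personal data under GDPR, so this complements access controls and encryption rather than replacing them.

```python
import hashlib
import hmac

# Assumption: in practice the key would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"
SENSITIVE_FIELDS = {"email", "ssn"}  # hypothetical field names


def pseudonymize(value, key=SECRET_KEY):
    """Return a keyed SHA-256 digest so equal inputs map to equal tokens."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_row(row):
    """Replace sensitive fields with tokens; leave other fields unchanged."""
    return {
        k: pseudonymize(v) if k in SENSITIVE_FIELDS else v
        for k, v in row.items()
    }


masked = mask_row({"id": 7, "email": "ann@example.com", "country": "DE"})
```

Because the same input always yields the same token, masked columns can still be used for joins and deduplication downstream.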

Despite these challenges, ETL remains an integral part of many business operations. With the right strategies and tools, these hurdles can be managed and the full potential of ETL processes realized.

Best Practices For Overcoming ETL Challenges

Overcoming ETL challenges calls for strategic solutions and adherence to best practices.

- Infrastructure should be scalable and flexible to address fluctuating data volumes. Cloud-based solutions can help manage storage and infrastructure concerns effectively and cost-efficiently.

- A well-defined data governance policy can simplify the data mapping and verification process.

- Perform incremental rather than bulk loads to reduce load time and mitigate the risk of data loss.

- Carry out regular audits to maintain data quality and integrity.

- Implement solid cybersecurity measures to safeguard sensitive data throughout the ETL process and reduce the risk of breaches or leaks.

- Utilize high-quality ETL tools that not only support diverse data types but also reduce coding requirements. These tools can manage the entire ETL process in a structured manner, thus minimizing errors.
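
The incremental-load practice mentioned in the list above can be sketched with a "high-water mark": each run copies only rows changed since the last recorded timestamp. The example uses Python's built-in `sqlite3` module with an in-memory database. The table names, columns, and the `incremental_load` helper are hypothetical, and the sketch assumes the source keeps a reliable `updated_at` column.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE source_orders (id INTEGER PRIMARY KEY, total REAL, updated_at TEXT);
    CREATE TABLE target_orders (id INTEGER PRIMARY KEY, total REAL, updated_at TEXT);
    INSERT INTO source_orders VALUES
        (1, 10.0, '2024-01-01T00:00:00'),
        (2, 20.0, '2024-01-02T00:00:00');
""")


def incremental_load(conn, watermark):
    """Copy rows changed after `watermark`; return the new watermark."""
    rows = conn.execute(
        "SELECT id, total, updated_at FROM source_orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    with conn:  # one transaction: all rows are committed or none are
        conn.executemany(
            "INSERT OR REPLACE INTO target_orders (id, total, updated_at) "
            "VALUES (?, ?, ?)",
            rows,
        )
    return rows[-1][2] if rows else watermark


watermark = incremental_load(conn, "")  # first run loads everything
conn.execute(
    "UPDATE source_orders SET total = 25.0, updated_at = '2024-01-03T00:00:00' "
    "WHERE id = 2"
)
watermark = incremental_load(conn, watermark)  # second run picks up only row 2
target = conn.execute("SELECT id, total FROM target_orders ORDER BY id").fetchall()
```

Wrapping each load in a single transaction means a failure partway through leaves the target unchanged, which is one way to limit the risk of partial loads.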

ETL Testing Tools

ETL testing tools validate, verify, and qualify data while preventing duplication and data loss. These tools play a pivotal role in improving the efficiency, speed, and effectiveness of the ETL process. They are designed to guarantee that the data transfer from multiple sources to a data warehouse is accurate and follows consistent patterns.

Using ETL testing tools can significantly reduce the manual effort required in data testing, which in turn reduces the risk of human error.
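
To make the idea of source-to-target validation concrete, here is a minimal sketch of the kind of check such tools automate: comparing row counts and a content hash between a source and a target table. It uses Python's built-in `sqlite3` and `hashlib` modules. The table names and the `reconcile` helper are invented for the example and do not reflect how any of the tools below work internally.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, digest) for a table, reading rows in key order."""
    # Table and column names are interpolated, so they must come from trusted code.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def reconcile(conn, source, target, key_column="id"):
    """Compare source and target; return a list of human-readable problems."""
    src_count, src_hash = table_fingerprint(conn, source, key_column)
    tgt_count, tgt_hash = table_fingerprint(conn, target, key_column)
    problems = []
    if src_count != tgt_count:
        problems.append(f"row count mismatch: {src_count} vs {tgt_count}")
    elif src_hash != tgt_hash:
        problems.append("row contents differ")
    return problems


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src (id INTEGER, name TEXT);
    CREATE TABLE tgt (id INTEGER, name TEXT);
    INSERT INTO src VALUES (1, 'a'), (2, 'b');
    INSERT INTO tgt VALUES (1, 'a'), (2, 'B');
""")
issues = reconcile(conn, "src", "tgt")
```

Here the counts match but one value differs in case, so the check reports a content mismatch that a count-only comparison would miss.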

| Informatica Data Validation | QuerySurge | TestBench |
|---|---|---|
| This tool offers comprehensive ETL testing and data integration testing. It identifies and addresses data discrepancies and anomalies, improving data integrity. Informatica Data Validation is known for its user-friendly graphical interface, which aids in creating, managing, and executing ETL test cases with minimal coding knowledge. | QuerySurge targets full-cycle big data, ETL, and data warehouse testing. It checks that data extracted from source files remains intact in the destination by analyzing large data sets and pinpointing discrepancies. It provides end-to-end testing, allowing data validation from source to target, and offers real-time analytics to support quick decision-making. QuerySurge is best known for automating the ETL testing process, which saves significant time and reduces human error. | TestBench is suited to projects where the size and complexity of the data are a concern. It provides integrated testing processes, making it a good fit for intricate data architectures or projects. It can also generate synthetic test data that does not breach privacy regulations, a feature that is especially useful when working with sensitive information. |

Despite the advanced capabilities of modern ETL testing tools, challenges persist. Different customer needs require diversified testing tools. Scalability could become an issue if the amount of data increases exponentially. Hence, it is crucial to consider the scalability of the tool itself.

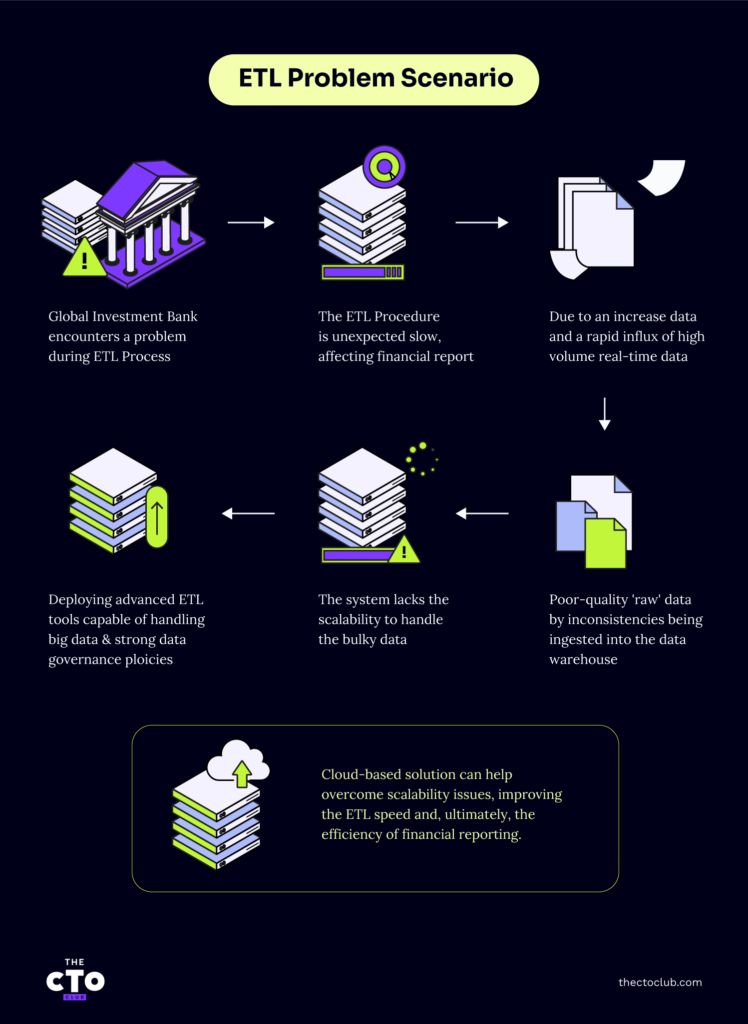

ETL Problem Scenario

Imagine that a global investment bank encounters a significant problem during its ETL process. The institution manages vast volumes of daily transactional data, and the ETL procedure is unexpectedly slow, which degrades the efficiency of its financial reporting. The cause is an increase in unstructured data and a rapid influx of high-volume real-time data that overwhelms the traditional ETL process infrastructure.

Poor-quality raw data ingested into the data warehouse then produces inconsistencies and inaccuracies. Additionally, because of on-premises infrastructure limitations, the system lacks the scalability to handle the growing data volumes.

The solution is deploying advanced ETL tools capable of handling big data, combined with strong data governance policies to ensure data quality at the source. In this scenario, a cloud-based solution can help overcome scalability issues, improving the ETL speed and, ultimately, the efficiency of financial reporting.

The Future of ETL

Expect a significant shift towards cloud-based ETL tools as companies leverage cloud technologies for data management and storage.

Integrating artificial intelligence and machine learning into ETL processes is expected to change how data is extracted and processed, resulting in more efficient and accurate data insights. Real-time ETL capabilities will also become more prevalent, enabling near-instantaneous data extraction and analysis for real-time decision-making.

In the coming years, emphasis will be placed on resolving enduring ETL challenges such as handling large data volumes, streamlining complex transformations, and ensuring data security and privacy.

With the proliferation of data and businesses' increasing reliance on data-driven decision-making, ETL processes need to become more agile, secure, and efficient. Thus, we can anticipate the emergence of more sophisticated ETL tools and methodologies to meet these demands.
