Best Neural Network Software Shortlist

Here are my picks for the 10 best tools from the 19 I reviewed.


Data science work, especially forecasting intricate patterns or building computer vision projects, often calls for powerful tools like Neural Designer. Software in this category supports both convolutional neural networks (CNNs) and recurrent neural networks (RNNs), which makes it a natural starting point for anyone exploring deep neural networks.

Whether you're working through backpropagation and neuron activation or fitting a model into an Apache-based stack at a startup, this software helps with adaptive data mining and building accurate predictive models. From linear regression problems to reinforcement learning, I've found these tools to be a game-changer that addresses the pain points many of us face in this field.

What Is Neural Network Software?

Neural network software is the foundational toolkit for creating, training, and deploying artificial neural networks (ANNs), which are algorithms inspired by the structure and function of the human brain. These tools run across platforms from Windows and iOS to Linux and Android, some integrate a Java API, and they combine the precision of machine learning algorithms with the adaptability of scripting languages.

Used predominantly by data scientists, machine learning engineers, and researchers, this software supports tasks ranging from image and speech recognition to predictive analytics and natural language processing. With these tools, industries and professionals aim to harness vast amounts of data, draw insights, and automate complex tasks to drive innovation and efficiency.
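To make "creating and training" concrete, here is a minimal sketch in plain Python of a single sigmoid neuron learning the logical AND function by gradient descent. The function names, learning rate, and epoch count are my own assumptions for illustration; none of the tools reviewed below work exactly this way, and real libraries handle far larger networks.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, epochs=2000, lr=0.5):
    """Fit one sigmoid neuron with plain gradient descent on log loss."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            out = sigmoid(w[0] * x1 + w[1] * x2 + b)
            err = out - target  # gradient of log loss w.r.t. the pre-activation
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b

def predict(w, b, x1, x2) -> int:
    """Threshold the neuron's output at 0.5 to get a class label."""
    return int(sigmoid(w[0] * x1 + w[1] * x2 + b) >= 0.5)

# Toy dataset (an assumption for this example): the AND truth table
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_neuron(AND_GATE)
predictions = [predict(weights, bias, a, c) for (a, c), _ in AND_GATE]
```

The software in this list automates the same loop (forward pass, error, weight update) across many layers and much larger datasets.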

Best Neural Network Software Summary

| # | Tool | Best For | Trial Info | Price |
|---|---|---|---|---|
| 1 | Synaptic.js | Best for neural networks in JavaScript environments | Not available | Pricing upon request |
| 2 | Keras | Best for modularity and quick experimentation | Not available | Pricing upon request |
| 3 | Knet | Best for online learning and training courses | Not available | From $15/user/month (min 5 seats) |
| 4 | SuperLearner | Best for ensemble learning methodologies | Not available | Free to use |
| 5 | Microsoft Cognitive Toolkit | Best for scalable deep learning tools from Microsoft | Not available | Pricing upon request |
| 6 | Chainer | Best for dynamic computation graph generation | Not available | Pricing upon request |
| 7 | NVIDIA DIGITS | Best for interactive deep learning visualization | Not available | Pricing upon request |
| 8 | Neuton AutoML | Best for automated model building and selection | Not available | From $50/user/month (billed annually) |
| 9 | Caffe | Best for modularity in deep learning frameworks | Not available | Pricing upon request |
| 10 | NVIDIA Deep Learning AMI | Best for AWS-integrated GPU acceleration | Not available | From $0.075/user/month (billed hourly based on EC2 instance type and usage) |


Best Neural Network Software Reviews

Synaptic.js is an architecture-free neural network library for node.js and the browser. Its primary purpose is to enable the development and training of neural networks within a JavaScript environment. Given this design, it's perfectly positioned for those needing neural networks within JavaScript contexts.

Why I Picked Synaptic.js:

In evaluating various tools for neural networks, Synaptic.js stood out because of its dedication to the JavaScript ecosystem. What sets it apart is its architecture-free approach, giving developers the freedom to design and innovate. I determined that for JavaScript environments, Synaptic.js is a top choice.

Standout Features & Integrations:

Synaptic.js offers a variety of trainable architectures, including LSTM, feed-forward, and Hopfield networks. It also provides a built-in trainer method, easing the process of training networks. The tool smoothly integrates with popular JavaScript frameworks and node.js for backend implementations.
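For readers unfamiliar with Hopfield networks, one of the architectures mentioned above, here is a rough sketch of the idea in plain Python. This is not Synaptic.js code and does not use its API; the function names and the six-unit pattern are assumptions chosen to keep the example small.

```python
def train_hopfield(patterns):
    """Hebbian rule: w[i][j] sums p[i] * p[j] over patterns, zero diagonal."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, state, sweeps=5):
    """Update each unit in turn to the sign of its weighted input."""
    s = list(state)
    for _ in range(sweeps):
        for i in range(len(s)):
            total = sum(w[i][j] * s[j] for j in range(len(s)))
            s[i] = 1 if total >= 0 else -1
    return s

stored = [1, -1, 1, -1, 1, -1]
hopfield_weights = train_hopfield([stored])
noisy = [1, -1, -1, -1, 1, -1]  # third unit flipped
restored = recall(hopfield_weights, noisy)
```

The network acts as an associative memory: given a corrupted copy of a stored pattern, repeated updates pull the state back to the original.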

Pros and cons

Pros:

- Smooth integration with node.js and other JS frameworks

- Offers a range of trainable architectures

- Dedicated to JavaScript environments

Cons:

- Might be overkill for simple neural network tasks

- Documentation might be limited compared to more extensive libraries

- Requires a solid understanding of neural networks for optimal use

Keras has rapidly become a favorite in the deep learning community, providing an intuitive API for building and prototyping neural networks. Renowned for its modular nature, it offers the flexibility required for swift experimentation without the need for exhaustive coding.

Why I Picked Keras:

After comparing several deep learning libraries, my selection gravitated towards Keras because of its user-friendly design and its flexibility. It shines with its modular architecture, allowing developers like me to experiment without being bogged down by complex coding processes. Its aptitude for rapid prototyping makes it evident that Keras is best for modularity and quick experimentation.

Standout Features & Integrations:

At the heart of Keras is its modular design, enabling the easy stacking of layers and swift experimentation. Its tight integration with TensorFlow, Theano, and Microsoft Cognitive Toolkit (CNTK) ensures that users have the backend support necessary for comprehensive deep-learning tasks.
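The layer-stacking idea can be sketched in a few lines of plain Python. To be clear, this is not the Keras API: the `Sequential` class, `dense` helper, and hand-picked weights below are hypothetical stand-ins I wrote to show what "modular" means in practice, with no training involved.

```python
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]

def dense(weights: List[Vector], biases: Vector) -> Layer:
    """Return a layer computing one weighted sum plus bias per output unit."""
    def forward(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(weights, biases)]
    return forward

def relu(x: Vector) -> Vector:
    """Element-wise rectifier: negative values become zero."""
    return [max(0.0, v) for v in x]

class Sequential:
    """Hypothetical container that applies its layers in order."""
    def __init__(self, layers=None):
        self.layers = list(layers or [])

    def add(self, layer: Layer) -> "Sequential":
        self.layers.append(layer)
        return self

    def __call__(self, x: Vector) -> Vector:
        for layer in self.layers:
            x = layer(x)
        return x

model = Sequential()
model.add(dense([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]))
model.add(relu)
model.add(dense([[2.0, 1.0]], [0.5]))
output = model([3.0, 1.0])  # [5.5]
```

Because every layer shares the same vector-in, vector-out shape, swapping an activation or inserting another layer is a one-line change, which is the property that makes quick experimentation possible.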

Pros and cons

Pros:

- Integration with major deep learning backends

- Modular design that facilitates swift experimentation

- User-friendly API for building neural networks

Cons:

- Dependency on backends like TensorFlow for some advanced features

- Can be less performant for very large-scale models

- Might require a learning curve for those new to deep learning

Knet is a powerful platform tailored to online learning, providing both educators and learners with a suite of tools to enhance the virtual education experience. Its emphasis on creating engaging and interactive training courses positions it as a standout in the online education sphere.

Why I Picked Knet:

When I was determining which platforms to recommend, Knet's focus on interactive learning experiences was a decisive factor. It stood apart with its innovative features that facilitate the creation and delivery of captivating training courses. From my perspective, its strengths in fostering online engagement and education are why I deem Knet best for online learning and training courses.

Standout Features & Integrations:

Knet offers a diverse range of features, from customizable course templates to real-time feedback tools. Its integrations with popular content management systems and video conferencing tools mean that educators can provide a rich and interactive learning environment.

Pros and cons

Pros:

- Real-time feedback capabilities

- Integration with leading content management systems

- Comprehensive tools for course creation

Cons:

- The interface might be less intuitive than some competitors

- Requires a minimum of 5 seats

- Might be overwhelming for first-time online educators

SuperLearner is a renowned R package developed to create and harness ensemble algorithms. These ensemble methods combine predictions from multiple models, enhancing the overall prediction's accuracy and robustness. This inherent focus on ensembling methodologies is precisely why SuperLearner is labeled as 'best for ensemble learning methodologies.'

Why I Picked SuperLearner:

In the vast array of machine learning packages, SuperLearner caught my eye because of its unwavering emphasis on ensembling techniques. After determining its capabilities and comparing it to alternatives, I judged SuperLearner as a prime choice for those prioritizing ensembling in their projects. The tool's dedication to combining multiple algorithms to produce a single, superior output is why it stands tall as the best for ensemble learning methodologies.

Standout Features & Integrations:

SuperLearner offers a wide range of algorithms from different R packages under its hood, providing users with a rich ensemble-building experience. The package integrates with many R-based algorithms, allowing for versatile model creation. Additionally, SuperLearner's API is designed to be user-friendly, easing the task of crafting complex ensembles.
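To illustrate what combining predictions from multiple models looks like, here is a small Python sketch. It uses inverse-error weighting, a simpler stand-in that I chose for clarity; it is not SuperLearner's actual weighting procedure, and the two "models" are just made-up prediction lists. In practice the weights should be estimated on held-out or cross-validated predictions rather than on the same data used for scoring.

```python
def mse(preds, targets):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

def inverse_error_weights(holdout_preds, targets):
    """Weight each model by 1/MSE, then normalize the weights to sum to 1."""
    inv = [1.0 / (mse(p, targets) + 1e-12) for p in holdout_preds]
    total = sum(inv)
    return [v / total for v in inv]

def ensemble_predict(model_preds, weights):
    """Weighted average of the models' predictions, one value per sample."""
    return [sum(w * p for w, p in zip(weights, sample))
            for sample in zip(*model_preds)]

targets = [1.0, 2.0, 3.0, 4.0]
model_a = [1.5, 2.5, 3.5, 4.5]  # consistently 0.5 too high
model_b = [0.0, 1.0, 2.0, 3.0]  # consistently 1.0 too low
ensemble_weights = inverse_error_weights([model_a, model_b], targets)
combined = ensemble_predict([model_a, model_b], ensemble_weights)
```

Here the two models err in opposite directions, so the weighted blend ends up closer to the targets than either model alone.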

Pros and cons

Pros:

- User-friendly API for ensemble creation

- Integration with various R-based algorithms

- Comprehensive ensemble methodologies

Cons:

- Requires a good understanding of ensemble methodologies for optimal results

- Can be resource-intensive with large datasets

- Limited to the R programming environment

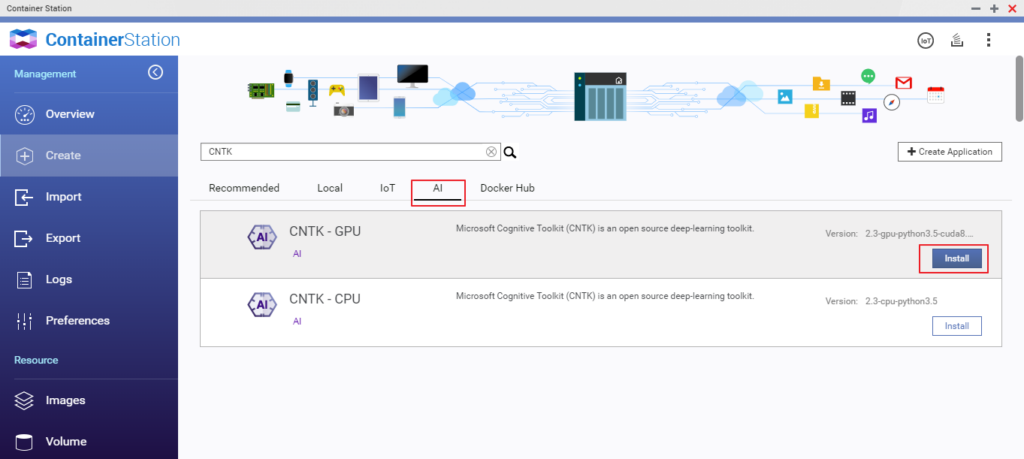

Microsoft Cognitive Toolkit, often known as CNTK, is a deep learning framework developed by Microsoft. It provides tools to realize and combine popular model types across multiple GPUs and servers. As a product from Microsoft, it stands out for those seeking scalable deep learning tools from this tech giant.

Why I Picked Microsoft Cognitive Toolkit:

When considering scalable deep learning tools, the credibility and robustness of Microsoft’s offerings immediately caught my attention. I chose the Cognitive Toolkit because of its capability to efficiently handle multiple GPUs and servers. Its Microsoft backing made it a definitive choice for scalable solutions.

Standout Features & Integrations:

The toolkit shines with its efficient handling of multiple GPUs, enabling fast model training. It also supports popular deep learning models and integrates with Microsoft's Azure cloud platform, allowing for efficient scaling and deployment.

Pros and cons

Pros:

- Azure integration for scaling and deployment

- Backed by Microsoft's extensive resources and research

- Optimized for multiple GPU handling

Cons:

- Some features might be over-engineered for small-scale applications

- Potential dependency on Microsoft's ecosystem

- Might be more complex for beginners

Neural network architectures can be both static and dynamic. Chainer differentiates itself by focusing on dynamic neural networks, known as 'define-by-run' networks. This approach allows for greater flexibility during the network's design and runtime adjustments, perfectly aligned with our 'best for dynamic computation graph generation' tag.
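The define-by-run idea is easier to see in code. The sketch below is a toy in plain Python, not Chainer's API: the `Var` class and `forward` function are hypothetical names, and the gradient logic handles only scalar addition and multiplication.

```python
class Var:
    """Scalar that records how it was computed so gradients can flow back."""
    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    def backward(self, upstream=1.0):
        """Accumulate the incoming gradient and pass it on to every parent."""
        self.grad += upstream
        for parent, local in self.parents:
            parent.backward(upstream * local)

def forward(x, steps):
    """Ordinary Python loop: the graph's depth depends on a runtime value."""
    y = x
    for _ in range(steps):
        y = y * x
    return y

x = Var(2.0)
y = forward(x, steps=2)  # builds y = x * x * x for this call only
y.backward()             # x.grad now holds dy/dx = 3 * x**2
```

The graph is recorded while the code runs, so calling `forward` with a different `steps` value yields a different graph without any separate compile step. That is the flexibility a define-by-run framework is built around.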

Why I Picked Chainer:

Chainer caught my attention due to its unique approach to neural network design. In the vast sea of neural network software, this differentiator made Chainer shine. Thus, if you're seeking dynamism and on-the-fly adjustments, Chainer is undoubtedly the best for dynamic computation graph generation.

Standout Features & Integrations:

Chainer boasts an intuitive interface that simplifies the creation of complex neural network architectures. With a plethora of pre-defined neural network layers and functions, users can quickly establish their desired models. Integration-wise, Chainer supports CUDA, ensuring that GPU computations, vital for deep learning tasks, are performed efficiently.

Pros and cons

Pros:

- Efficient GPU computation with CUDA support

- Extensive library of pre-defined layers and functions

- Dynamic 'define-by-run' architecture approach

Cons:

- Relatively fewer third-party extensions are available

- Less community support compared to some other frameworks

- Steeper learning curve for beginners

NVIDIA DIGITS stands out as a powerful, interactive tool tailored for visualizing and managing deep learning experiments. Its ability to visually represent intricate neural network structures and training processes makes it exceptional for those craving clear, interactive deep learning visualization.

Why I Picked NVIDIA DIGITS:

Navigating through the landscape of visualization tools, NVIDIA DIGITS quickly grabbed my attention. Its distinct flair for interactive visualization of deep learning processes played a pivotal role in my decision. Given its strengths in portraying deep learning in a visual format, it's clear to me that NVIDIA DIGITS is the best for those seeking interactive deep learning visualization.

Standout Features & Integrations:

NVIDIA DIGITS excels in offering users a real-time view of their deep learning models' training, complete with performance metrics. Its compatibility with popular deep learning frameworks like TensorFlow and Caffe ensures users can integrate their models effortlessly for visualization.

Pros and cons

Pros:

- Intuitive interface for managing experiments

- Compatibility with major deep learning frameworks

- Real-time visualization of model training

Cons:

- Larger datasets can sometimes slow visualization speeds

- May require specific hardware for optimal performance

- Predominantly tailored for NVIDIA GPU users

Neuton AutoML streamlines the machine learning process by offering automated solutions for building, training, and selecting optimal models. Its capability to autonomously pick the best models based on given datasets sets it apart in the vast landscape of machine learning tools.

Why I Picked Neuton AutoML:

Choosing a tool for automated model building wasn't straightforward, but Neuton AutoML caught my attention with its sophisticated yet straightforward approach. In my opinion, the way it simplifies complex machine-learning tasks by autonomously building and selecting models stands out. This unique capability convinced me that it's best suited for automated model building and selection.

Standout Features & Integrations:

Neuton AutoML is designed with features like automated feature engineering and data preprocessing, saving users significant time. Additionally, its integration with popular data platforms, including AWS and Google Cloud, ensures a smooth workflow.
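At its simplest, automated model selection means fitting several candidates and keeping whichever scores best on held-out data. The Python sketch below shows that loop with two toy candidates. It is an illustration under my own assumptions (the candidate models, the data, and all function names are hypothetical) and says nothing about how Neuton AutoML works internally.

```python
def fit_mean(xs, ys):
    """Baseline candidate: always predict the training mean."""
    mean = sum(ys) / len(ys)
    return lambda x: mean

def fit_line(xs, ys):
    """Candidate: least-squares straight line y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return lambda x: slope * x + intercept

def select_model(candidates, train, valid):
    """Fit every candidate on train data; keep the lowest validation MSE."""
    best_name, best_model, best_err = None, None, float("inf")
    for name, fit in candidates.items():
        model = fit(*train)
        err = sum((model(x) - y) ** 2 for x, y in zip(*valid)) / len(valid[0])
        if err < best_err:
            best_name, best_model, best_err = name, model, err
    return best_name, best_model, best_err

train = ([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])  # follows y = 2x + 1
valid = ([4.0, 5.0], [9.0, 11.0])
chosen_name, chosen_model, chosen_err = select_model(
    {"mean": fit_mean, "line": fit_line}, train, valid)
```

An AutoML product wraps a much larger candidate pool, plus preprocessing and feature engineering, around the same compare-on-validation-data principle.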

Pros and cons

Pros:

- Optimized model selection based on datasets

- Integration with major data platforms

- Automated feature engineering and preprocessing

Cons:

- Limited support for niche or specialized models

- Requires some understanding of machine learning to leverage fully

- Pricing might be prohibitive for some users

Caffe, originating from the Berkeley Vision and Learning Center, offers a flexible framework for deep learning, prioritizing modularity and speed. Its modularity ensures developers can structure their neural network models in various ways, making it a fitting tool for diverse deep learning applications.

Why I Picked Caffe:

When comparing deep learning frameworks, Caffe's emphasis on modularity and speed greatly influenced my decision. I have observed its flexibility in building diverse neural network architectures. Based on its design and my experience, I believe Caffe is best for those prioritizing modularity in deep learning frameworks.

Standout Features & Integrations:

Caffe shines with its expressive architecture, which lets users define and optimize models through configuration rather than hard-coded logic. Its compatibility with various GPUs and its integration with Python and MATLAB give users a wide range of development options.

Pros and cons

Pros:

- Integration with Python and MATLAB for expanded development

- Compatibility with numerous GPUs for enhanced computation

- High modularity for diverse neural network architectures

Cons:

- Some features might require manual tuning for optimal performance

- Community support can be less extensive than larger frameworks

- Might have a steeper learning curve for beginners

When one thinks of leveraging the immense processing power of GPUs on the AWS platform, NVIDIA Deep Learning AMI often stands as the top choice. Designed by NVIDIA, this AMI marries AWS's cloud capabilities with GPU acceleration, echoing our assertion that it's 'best for AWS-integrated GPU acceleration.'

Why I Picked NVIDIA Deep Learning AMI:

The choice to spotlight NVIDIA Deep Learning AMI in this list resulted from careful comparison and judgment. Among cloud-ready GPU solutions, this AMI distinguished itself through its integration with AWS. I'm of the strong opinion that NVIDIA's AMI is unparalleled for those seeking efficient GPU acceleration on AWS.

Standout Features & Integrations:

NVIDIA Deep Learning AMI comes pre-installed with many deep learning frameworks like TensorFlow, PyTorch, and MXNet, ensuring users can dive right into model development. On the integration front, its optimization for NVIDIA GPUs and tight-knit connection with AWS services like EC2 and S3 make data processing and model training a streamlined affair.

Pros and cons

Pros:

- Close integration with core AWS services

- Optimized for NVIDIA GPUs for swift processing

- Pre-installed with numerous deep learning frameworks

Cons:

- May require familiarity with AWS services for optimal utilization

- Can get expensive with prolonged GPU-intensive tasks

- Limited to the AWS ecosystem

Other Neural Network Software

Below is a list of additional neural network software that I shortlisted but that did not make it into the top 10. These tools are still worth checking out.

- Swift AI

Good for data-driven business insights

- Google Cloud Deep Learning Containers

Good for Google Cloud integrated deep learning applications

- Clarifai

Good for visual recognition tasks and AI training

- Bitnami PyTorch

Good for deploying PyTorch on Kubernetes

- PyTorch

Good for dynamic computational graph handling

- TFLearn

Good for quick TensorFlow model building

- Merlin

Good for speech synthesis research

- DeepPy

Good for neural networks with Pythonic simplicity

- ConvNetJS

Good for deep learning in web browsers

Selection Criteria For Choosing Neural Network Software

The sheer number of options can be overwhelming when choosing deep learning and neural network software. I've dived deep into this realm, testing and evaluating dozens of these tools. My focus was not just on their popularity but also on specific functionalities crucial for researchers, developers, and enterprises. Let's delve into the criteria that are paramount when selecting such software.

Core Functionality:

- Model Building: The tool should enable easy construction of neural network architectures, whether they're feedforward, convolutional, recurrent, or others.

- Training: Robust training capabilities, including batch training and real-time data feeding.

- Evaluation: Assess the accuracy and performance metrics of the built models.

- Deployment: Capability to deploy trained models into production environments.

Key Features:

- Customizability: The ability to define custom layers, loss functions, and optimization strategies.

- Scalability: Efficient utilization of hardware, whether it's CPU, GPU, or TPU, and the potential to scale across multiple devices or nodes.

- Pre-trained Models: Availability of a repository of pre-trained models that can be fine-tuned for specific tasks.

- Visualization Tools: Tools to visualize training metrics, model architecture, and data samples.

- Regularization Techniques: Features to prevent overfitting, such as dropout, early stopping, and weight constraints.

- Extensive Libraries: Comprehensive libraries that encompass a wide array of functions, classes, and pre-defined architectures.
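Of the regularization techniques listed above, early stopping is the easiest to show in code. Here is a minimal Python sketch of the stopping rule, assuming you already have a validation loss per epoch; the function name, the `patience` and `min_delta` parameters, and the loss values are my own choices for illustration, not any particular library's API.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve by more than
    min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Made-up validation losses: improving at first, then drifting upward
losses = [0.90, 0.70, 0.55, 0.56, 0.58, 0.60, 0.65]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=2)
```

With these losses, training halts at epoch 4 and the weights from epoch 2 would be the ones to keep, which avoids the later epochs where the model starts to overfit.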

Usability:

- Intuitive Design: The software should possess a clear and organized layout, ensuring that functionalities are easily accessible. For instance, data pre-processing tools should be streamlined and straightforward.

- Documentation & Tutorials: Comprehensive guides and examples that help new users grasp the fundamentals and advanced users fine-tune their expertise.

- Community Support: A vibrant community ensures that any doubts or issues faced are addressed promptly. Look for active forums, regular software updates, and a general buzz around the tool.

- Compatibility & Integrations: It should play well with other software, libraries, and tools in the ecosystem. For instance, if you're dealing with image data, easy integration with image recognition software can be invaluable.

- Role-Based Access: Especially important for enterprise solutions, where multiple stakeholders, from data scientists to business analysts, might need varying levels of access.

- Training & Onboarding Programs: For more complex solutions, having structured training sessions or a learning library can significantly smooth the onboarding process.


Summary

In the rapidly evolving landscape of neural network software, making the right choice is crucial. Our extensive exploration delved deep into the benefits, pricing structures, and unique selling propositions of several top tools. This journey has equipped us with valuable insights to aid any individual or organization in finding the right fit for their specific needs.

Key Takeaways:

- Tailor to Your Needs: While many tools offer a broad range of functionalities, it's essential to prioritize what aligns most with your specific use case. Whether it's scalability, customizability, or a particular set of features, select a tool that matches your immediate and future requirements.

- Invest in Usability: The best tools merge power with user-friendliness. Seek out software that not only offers rich features but also ensures a streamlined user experience, simplifying the complexities of neural network processes.

- Understand the Cost: Price isn't just about the amount but also the structure. Whether it's freemium, subscription-based, or usage-based, select a pricing model that offers flexibility and aligns with your budgetary constraints.

Most Common Questions Regarding Neural Network Software (FAQs)

What benefits can I expect from using the best neural network software?

- Efficiency: Top-tier software speeds up the process of designing, training, and deploying neural network models.

- Customizability: They offer flexible architectures allowing users to build models tailored to specific requirements.

- Scalability: As your data grows, these tools can leverage advanced hardware, ensuring models train faster and more efficiently.

- Comprehensive Libraries: Users get access to extensive libraries that cover various functions, architectures, and pre-trained models, streamlining the development process.

- Collaborative Features: Many of these tools foster collaboration, enabling teams to work cohesively on models and data.

How much do these neural network tools typically cost?

The pricing varies significantly based on features, capabilities, and the target audience. Enterprise solutions with extensive features might cost more, whereas basic tools targeting individuals or small teams may be cheaper or even free.

Can you explain the pricing models for these tools?

Certainly. Neural network software can adopt several pricing models:

- Freemium: Users get basic features for free and can upgrade to a premium version with more advanced features.

- Subscription-Based: Users pay a monthly or annual fee for access, often with different tiers offering varying features.

- Perpetual License: Users pay a one-time fee for continuous use of the software.

- Usage-Based: Costs are determined by the amount of computational resources or API calls used.
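When weighing a subscription against usage-based billing, a quick back-of-the-envelope calculation helps. The Python sketch below uses hypothetical figures (5 users, $50 per user per month, $1.25 per compute hour) purely for illustration; substitute the actual rates quoted by the vendor you are evaluating.

```python
def subscription_cost(users, price_per_user_month, months):
    """Total subscription spend for a team over a number of months."""
    return users * price_per_user_month * months

def usage_cost(hours, rate_per_hour):
    """Total spend under usage-based billing for the given compute hours."""
    return hours * rate_per_hour

def break_even_hours(users, price_per_user_month, rate_per_hour):
    """Monthly compute hours at which usage billing equals the subscription."""
    return users * price_per_user_month / rate_per_hour

# Hypothetical figures for illustration only
monthly_subscription = subscription_cost(users=5, price_per_user_month=50.0, months=1)
monthly_usage = usage_cost(hours=100, rate_per_hour=1.25)
threshold = break_even_hours(users=5, price_per_user_month=50.0, rate_per_hour=1.25)
```

Under these assumed numbers, the team pays $250 a month on subscription versus $125 for 100 compute hours, and usage-based billing stays cheaper until it passes 200 hours a month.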

Is there a typical price range for these tools?

Yes, while prices can vary greatly, most neural network tools fall in the range of $10/user/month to $200/user/month for subscription-based models. Enterprise solutions might have custom pricing based on specific requirements.

Which is the cheapest software available?

There are several affordable tools, but TFLearn and DeepPy are among the more budget-friendly options, offering a good balance of features for their price.

Which software is the most expensive?

Enterprise-level tools, especially those tailored for large organizations with extensive requirements like Clarifai, tend to be on the pricier side.

Are there any free tools available?

Yes, several tools offer free versions or are entirely free to use. ConvNetJS and Merlin are examples of software that provide capabilities without cost, though they might lack some advanced features found in paid tools.

What do you think?

Lastly, while I've taken great care to curate this list of neural network software, the tech landscape is ever-evolving. Perhaps you've come across a tool or software that deserves a mention. If so, I'd love to hear about it. Feel free to share your recommendations or experiences in the comments section or drop me a message. Your insights could be invaluable to our community and aid others in making informed decisions. Let's collaborate and keep this guide as comprehensive and up-to-date as possible.