Best Deep Learning Software Shortlist

Here's my pick of the 10 best tools from the 27 I reviewed.


Harnessing the power of artificial intelligence, deep learning software helps you solve intricate business problems. With high-performance computing frameworks and good tutorials, even a startup can build convolutional neural networks (CNNs), which power image recognition, and recurrent neural networks (RNNs), which handle sequential data such as text and time series.

A platform of this kind typically offers modular, independent components and predictive analytics, which makes it a cornerstone of data mining work. "No code" interfaces make many of these tools easy to use, and integrations with big data engines such as Apache Spark let them handle large datasets while using compute efficiently. Whether you build node-based workflows or work in languages like JavaScript, these tools make regression and other predictive analytics more approachable.

What is Deep Learning Software?

Deep learning software is a category of AI applications that build artificial neural networks, loosely inspired by the way the human brain processes information, to find patterns in data and support decision-making. Data scientists, machine learning engineers, researchers, and industries such as healthcare, finance, and retail use these tools to tackle complex challenges. The tools are typically written in or expose APIs for languages such as Python, C/C++, Java, and Fortran, often rely on CUDA for GPU acceleration, and run on platforms including Windows, Linux, iOS, and Android.

From image and speech recognition to natural language processing, deep learning software lets these users build artificial neural networks, handle vast datasets, and extract insights that inform strategic decisions. Applications range from predicting trends and improving customer experience to enabling autonomous vehicles and supporting drug discovery.

Best Deep Learning Software Summary

| # | Tool | Best For | Trial Info | Price |
|---|---|---|---|---|
| 1 | Comet | Best for experiment-driven machine learning development | Not available | From $39/user/month (billed annually) |
| 2 | Labellerr | Best for automated data labeling and annotation in AI | Not available | From $49/user/month |
| 3 | cnvrg.io | Best for managing, automating, and accelerating ML workflows | 14-day free trial | Pricing upon request |
| 4 | MIPAR | Best for image analysis with deep learning algorithms | Not available | From $250/user/month |
| 5 | Cauliflower | Best for intuitive AI model creation with a visual interface | Free trial available | From $29/user/month |
| 6 | Keras | Best for quick prototyping and production of neural networks | Not applicable (free, open source) | Free (open source) |
| 7 | Prime AI | Best for easy integration of machine learning into business operations | Not available | From $59/user/month |
| 8 | Wolfram Mathematica | Best for symbolic and numerical computation in deep learning | Not available | From $25/user/month (billed annually) |
| 9 | Amplifire | Best for improving learning outcomes with AI-driven adaptive learning | Not available | Pricing upon request |
| 10 | NVIDIA GPU Cloud (NGC) | Best for leveraging powerful GPU-accelerated AI and deep learning tools | Not available | From $0.09/GPU/hour (billed monthly) |


Best Deep Learning Software Reviews

Comet enables data scientists to track, compare, explain, and optimize their machine-learning models and experiments. Its functionality aligns perfectly with an experiment-driven approach, where monitoring and comparing results is pivotal.

Why I Picked Comet:

I found Comet to stand out in machine learning development for its focus on experiment management. Its robustness in tracking, comparing, and managing ML experiments makes it a top pick. The ability to handle numerous experiments simultaneously while maintaining clear, organized records underpins its claim as the best tool for experiment-driven machine learning development.

Standout features and integrations:

Comet shines with features like real-time performance visualizations, code tracking, and automated experiment tracking. These features simplify managing and tracking experiments, driving more focused and effective machine learning development. Moreover, Comet integrates well with popular libraries such as Keras, PyTorch, and TensorFlow, which broadens its utility and eases workflow.
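To make "experiment tracking" concrete, here is a minimal, stdlib-only Python sketch of what such a tool records: each run's hyperparameters and per-step metrics, plus a helper that compares runs. The `Run` and `ExperimentLog` classes are hypothetical illustrations, not Comet's SDK, and the loss curve is toy data.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One experiment run: its hyperparameters and logged metric values."""
    name: str
    params: dict
    metrics: dict = field(default_factory=dict)  # metric name -> list of (step, value)

    def log_metric(self, key: str, value: float, step: int) -> None:
        self.metrics.setdefault(key, []).append((step, value))

    def final(self, key: str) -> float:
        # Value logged at the highest step for this metric.
        return max(self.metrics[key])[1]


class ExperimentLog:
    """Hypothetical in-memory tracker; real tools persist runs to a server."""

    def __init__(self) -> None:
        self.runs: list[Run] = []

    def start_run(self, name: str, **params) -> Run:
        run = Run(name, dict(params))
        self.runs.append(run)
        return run

    def best_run(self, metric: str, minimize: bool = True) -> Run:
        # Compare runs by the final value of the chosen metric.
        chooser = min if minimize else max
        return chooser(self.runs, key=lambda r: r.final(metric))


log = ExperimentLog()
for lr in (0.1, 0.01):
    run = log.start_run(f"lr={lr}", learning_rate=lr)
    for step in range(3):
        # Toy loss curve standing in for real training results.
        run.log_metric("val_loss", 1.0 / (1 + step * lr * 10), step)

best = log.best_run("val_loss")
print(best.name, best.params)
```

The point of the design is that every run is stored with its parameters, so "which setting worked best?" becomes a query instead of a memory exercise.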

Pros and cons

Pros:

- Broad compatibility with popular libraries

- Effective performance visualizations

- Robust experiment management

Cons:

- Limited offline capabilities

- UI can have a steep learning curve

- Pricing may be steep for small teams

Labellerr is a specialized platform that accelerates AI model training through automatic data labeling and annotation. Its key strength is assisting AI teams in handling vast amounts of data with precision.

Why I Picked Labellerr:

I chose Labellerr mainly for its strength in the crucial task of data labeling and annotation. Its automation capabilities significantly reduce the effort and time involved, making it a standout choice for AI projects that deal with large volumes of data. For this reason, I rank Labellerr best for automated data labeling and annotation in AI.

Standout features and integrations:

Labellerr boasts multi-format data labeling, innovative annotation tools, and project management tools that aid in team collaboration. It also integrates smoothly with popular data storage and machine learning platforms like Amazon S3, Google Cloud, Microsoft Azure, and more, simplifying the overall workflow.
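Automated labeling tools commonly pre-label data with a model and send low-confidence items to human annotators. The sketch below shows that routing logic under stated assumptions: `toy_predict` is a hypothetical stand-in for a trained model, and the 0.9 confidence threshold is an arbitrary choice, not a Labellerr setting.

```python
from typing import Callable


def route_items(
    items: list[str],
    predict: Callable[[str], tuple[str, float]],
    threshold: float = 0.9,
) -> tuple[dict[str, str], list[str]]:
    """Split items into auto-labeled ones and ones queued for human review."""
    auto_labeled: dict[str, str] = {}
    review_queue: list[str] = []
    for item in items:
        label, confidence = predict(item)
        if confidence >= threshold:
            auto_labeled[item] = label  # accept the model's label as-is
        else:
            review_queue.append(item)  # a person confirms or corrects it
    return auto_labeled, review_queue


def toy_predict(filename: str) -> tuple[str, float]:
    # Hypothetical stand-in for a trained image classifier.
    if "cat" in filename:
        return "cat", 0.97
    if "dog" in filename:
        return "dog", 0.93
    return "unknown", 0.40


labeled, queue = route_items(["cat_01.jpg", "dog_07.jpg", "blurry_03.jpg"], toy_predict)
print(labeled)  # {'cat_01.jpg': 'cat', 'dog_07.jpg': 'dog'}
print(queue)    # ['blurry_03.jpg']
```

Raising the threshold trades annotation effort for label quality: more items go to people, fewer model mistakes slip through.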

Pros and cons

Pros:

- Robust project management tools

- Supports various data formats

- Efficient automation of data labeling

Cons:

- Limited flexibility in certain workflows

- Could be complicated for beginners

- May not be cost-effective for smaller projects

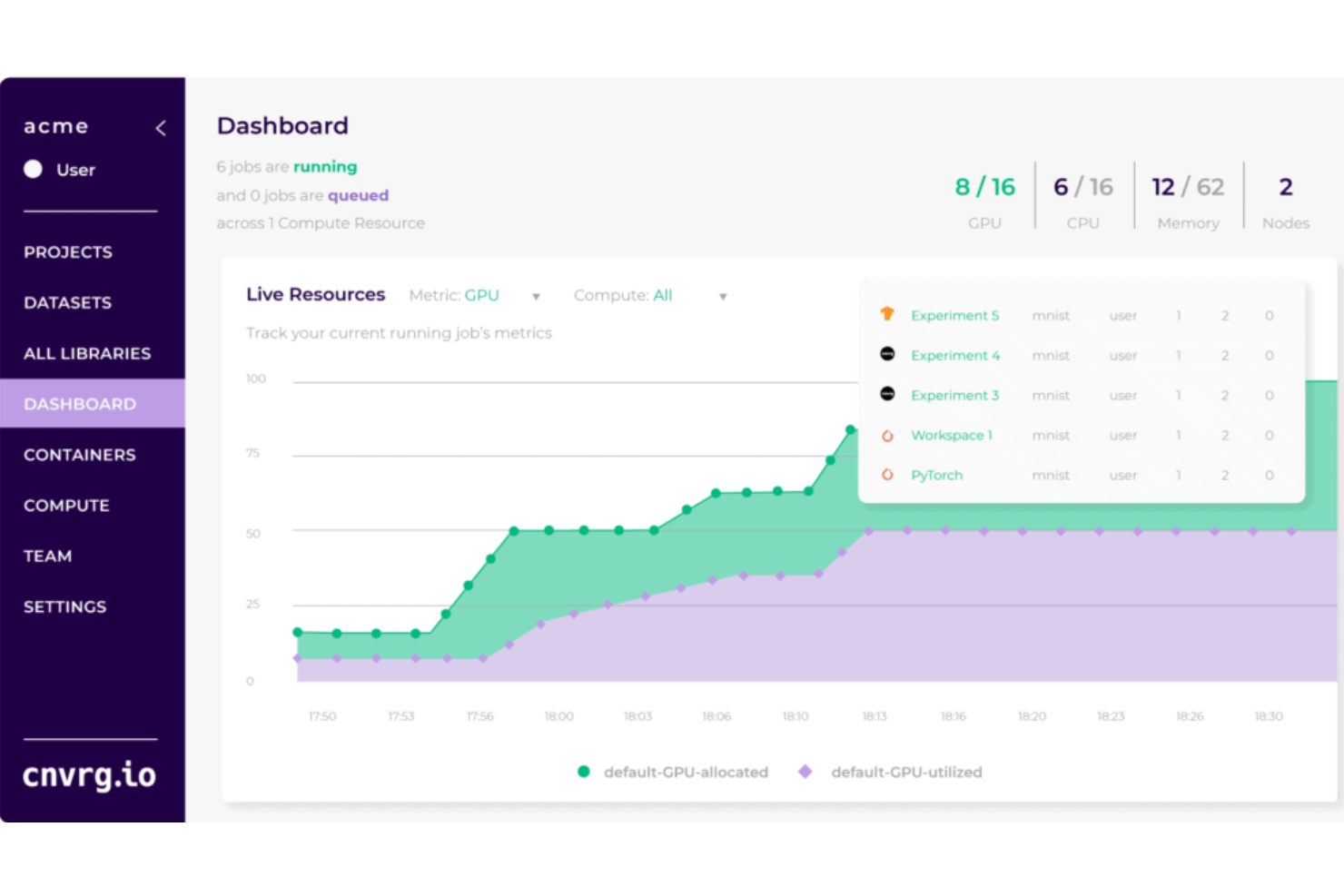

cnvrg.io is an end-to-end machine-learning operations (MLOps) platform designed to help data scientists and engineers automate machine learning from research to production. Its strengths lie in managing, automating, and accelerating machine-learning workflows, making it an optimal choice for teams looking to streamline their machine-learning projects.

Why I Picked cnvrg.io:

I chose cnvrg.io for this list due to its comprehensive approach to managing machine-learning workflows. It automates repetitive tasks, letting data scientists focus on developing and improving models. That combination of workflow management, automation, and acceleration is why I rank cnvrg.io best for managing, automating, and accelerating ML workflows.

Standout features and integrations:

cnvrg.io provides robust features like workflow automation, model management, versioning, and auto-scaling. Additionally, it offers integration capabilities with popular tools like Jupyter Notebooks, RStudio, TensorFlow, and PyTorch, providing users with an excellent working environment.
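Workflow automation and versioning can be pictured as a pipeline whose steps run in order while each intermediate output is fingerprinted, so a rerun on the same data is verifiably identical. The sketch below is a hypothetical, stdlib-only illustration of that idea, not cnvrg.io's API; hashing JSON-serialized outputs with SHA-256 is an assumed versioning scheme, and the "train" step is a toy.

```python
import hashlib
import json
from typing import Any, Callable

Step = tuple[str, Callable[[Any], Any]]


def fingerprint(obj: Any) -> str:
    """Short content hash of a JSON-serializable object, used as a version id."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


def run_pipeline(data: Any, steps: list[Step]) -> tuple[Any, list[tuple[str, str]]]:
    """Run steps in order, recording (step name, output version) for each."""
    lineage = []
    for name, func in steps:
        data = func(data)
        lineage.append((name, fingerprint(data)))
    return data, lineage


def scale(rows: list[float]) -> list[float]:
    # Divide by the maximum so values fall in (0, 1].
    top = max(rows)
    return [r / top for r in rows]


steps: list[Step] = [
    ("clean", lambda rows: [r for r in rows if r is not None]),
    ("scale", scale),
    ("train", lambda rows: {"mean": sum(rows) / len(rows)}),  # toy "model"
]

model, lineage = run_pipeline([2, None, 4, 8], steps)
print(model, lineage)
```

Because the lineage records a version for every step, a change in raw data shows up as a different fingerprint at the first step it affects.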

Pros and cons

Pros:

- Emphasis on automation, freeing up data scientists for more complex tasks

- Robust integration capabilities

- Comprehensive management of ML workflows

Cons:

- Customization options could be more robust

- Might have a learning curve for new users

- Pricing transparency could be improved

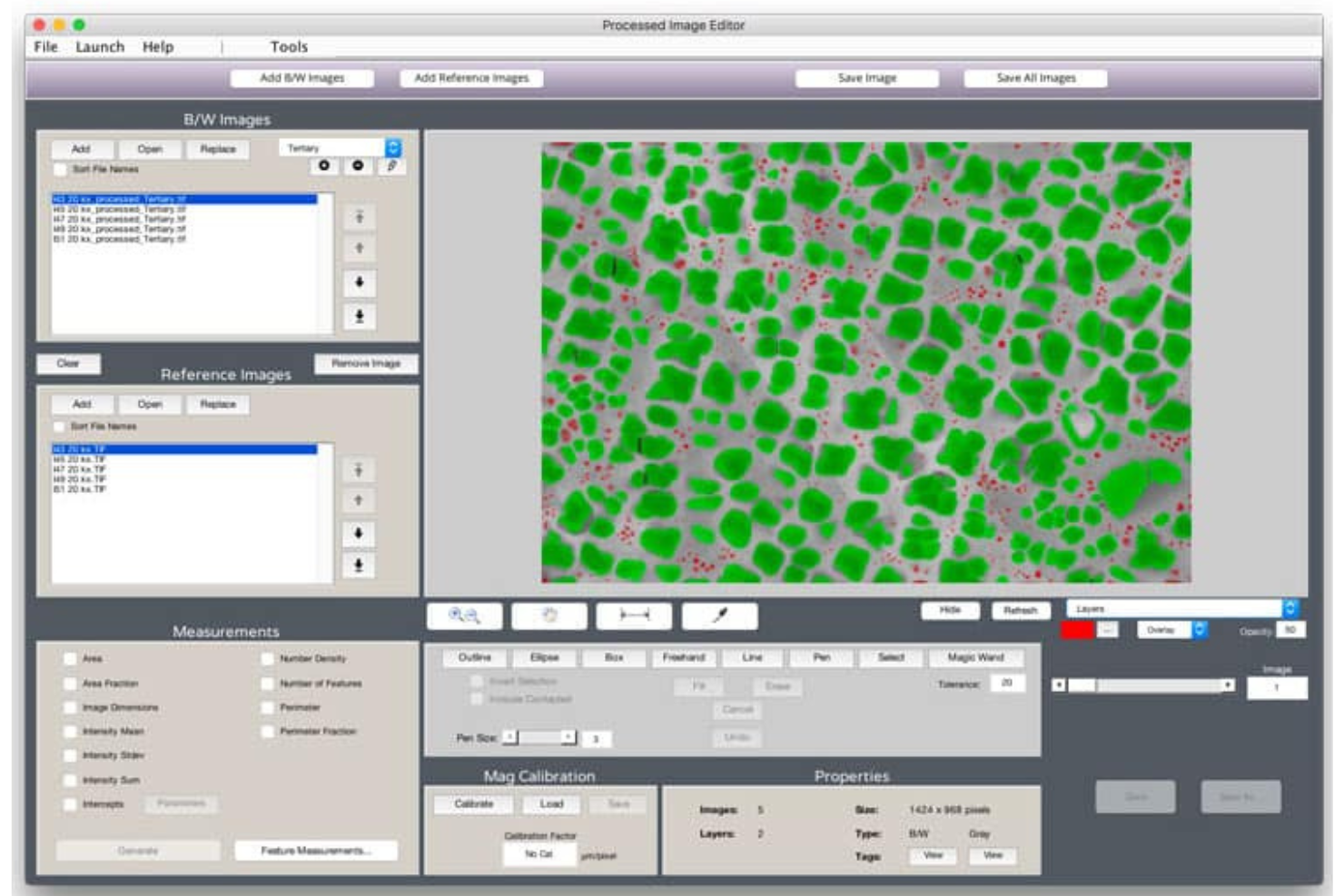

MIPAR is a powerful, quantitative image analysis software specifically designed to allow users to extract and interpret information from various image types. With its deep learning algorithms, MIPAR performs intricate image analysis tasks, making it a perfect tool for complex image analysis projects.

Why I Picked MIPAR:

I chose MIPAR for this list because of its capability to handle complex image analysis tasks with deep learning algorithms. Its sophistication and robustness in image analysis drove my decision, and its capacity to handle various image types sets it apart from similar tools. These attributes make MIPAR my pick for image analysis with deep learning algorithms.

Standout features and integrations:

MIPAR offers a variety of features, such as automatic defect detection, particle analysis, and tissue segmentation, among others. It also integrates well with other image capture and imaging platforms, providing a comprehensive image analysis solution.
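Particle analysis ultimately comes down to finding connected regions in a segmented (binary) image and measuring them. Deep learning models typically handle the segmentation step; the minimal sketch below covers only the classical counting and measuring step that follows, using a breadth-first flood fill with 4-connectivity. The function name and the small mask are illustrative assumptions, not MIPAR's implementation.

```python
from collections import deque


def measure_particles(mask: list[list[int]]) -> list[int]:
    """Return the pixel area of each 4-connected region of 1s in a binary mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    areas = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Flood-fill a new particle starting from this pixel.
            area, queue = 0, deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            areas.append(area)
    return areas


segmented = [
    [1, 1, 0, 0, 0],
    [1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 1, 0, 0, 0],
]
areas = measure_particles(segmented)
print(len(areas), areas)  # 3 [3, 4, 1]
```

With 4-connectivity, diagonally touching pixels count as separate particles; switching to 8-connectivity merges them, and the right choice depends on the material being imaged.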

Pros and cons

Pros:

- Integrates well with other imaging platforms

- Can deal with a variety of image types

- Handles complex image analysis tasks effectively

Cons:

- Smaller community and fewer resources for problem-solving

- Higher pricing compared to some other tools

- Steep learning curve for beginners

Cauliflower is a user-friendly platform facilitating AI model creation through a distinctive visual interface. Its design-centric approach enables experts and beginners to build models intuitively, proving its worth for users who prioritize user-friendliness and simplicity.

Why I Picked Cauliflower:

Cauliflower stood out to me among AI model creation tools for its clean, easy-to-navigate visual interface. The value of a tool that lowers the barrier to entry to AI cannot be overstated, and Cauliflower achieves this by making model building intuitive. This simplicity and accessibility make it the best choice for those who value an intuitive visual interface for AI model creation.

Standout features and integrations:

Cauliflower's most notable feature is its drag-and-drop model creation. Paired with an interactive data exploration tool and automatic feature engineering, it makes the platform a joy to work with. For integrations, it connects to SQL databases, Google Sheets, and Excel, which makes it versatile across data sources.
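Automatic feature engineering generally means generating candidate features from existing columns and then keeping the useful ones. The deliberately simple sketch below covers only the generation half, assuming numeric columns and using pairwise products and ratios as a hypothetical transformation set; it does not describe how Cauliflower does this internally.

```python
from itertools import combinations


def generate_features(row: dict[str, float]) -> dict[str, float]:
    """Add pairwise product and ratio features to a row of numeric columns."""
    features = dict(row)
    for a, b in combinations(sorted(row), 2):
        features[f"{a}*{b}"] = row[a] * row[b]
        if row[b] != 0:  # skip ratios that would divide by zero
            features[f"{a}/{b}"] = row[a] / row[b]
    return features


row = {"price": 20.0, "quantity": 3.0, "discount": 0.0}
print(generate_features(row))
```

A real tool would follow this with a selection step (for example, scoring each candidate against the target) so the feature count doesn't explode as columns are added.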

Pros and cons

Pros:

- Automatic feature engineering

- Integrations with popular data sources

- User-friendly visual interface

Cons:

- Pricing may be steep for individual users

- Does not support all machine learning algorithms

- Limited options for advanced users

Keras is a high-level neural network API designed to enable fast experimentation with deep neural networks. It runs on top of a lower-level backend framework (TensorFlow for much of its history and, since Keras 3, JAX or PyTorch as well) and is designed to be user-friendly, modular, and extensible. Given its design and functionality, Keras is ideal for fast prototyping and production of neural network models.

Why I Picked Keras:

Keras is my choice for this list because of its emphasis on user-friendliness and speed. Its intuitive API design accelerates the process from concept to result, facilitating rapid prototyping. Moreover, its ability to handle complex neural network architectures sets it apart from other similar tools. That's why I selected Keras as the best tool for quick prototyping and production of neural networks.

Standout features and integrations:

Keras' major features include accessible model building, comprehensive preprocessing layers, and powerful debugging tools. Because it delegates computation to lower-level backend frameworks (historically including Theano; in Keras 3, TensorFlow, JAX, and PyTorch), it stays flexible and extensible and fits into almost any machine learning workflow.
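For a sense of what Keras abstracts away: a `Dense` layer computes `activation(W·x + b)`, and stacking layers chains those computations. The pure-Python sketch below reproduces that forward pass for a two-layer network with hand-picked weights. It is not Keras code; in Keras you would declare a similar stack with `keras.Sequential` and `keras.layers.Dense` and let the framework handle weight initialization, gradients, and hardware.

```python
import math
from typing import Callable


def dense(
    x: list[float],
    weights: list[list[float]],
    bias: list[float],
    activation: Callable[[float], float],
) -> list[float]:
    """One fully connected layer: each output unit is activation(w · x + b)."""
    return [
        activation(sum(w_i * x_i for w_i, x_i in zip(w_row, x)) + b)
        for w_row, b in zip(weights, bias)
    ]


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hand-picked weights for a 2-input -> 2-hidden -> 1-output network.
hidden_w = [[1.0, -1.0], [0.5, 0.5]]
hidden_b = [0.0, 0.0]
output_w = [[1.0, 1.0]]
output_b = [-1.0]

x = [2.0, 1.0]
h = dense(x, hidden_w, hidden_b, relu)     # [1.0, 1.5]
y = dense(h, output_w, output_b, sigmoid)  # [sigmoid(1.5)]
print(h, y)
```

Training is what turns hand-picked weights into learned ones; that gradient machinery is exactly what a framework like Keras supplies.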

Pros and cons

Pros:

- Comprehensive set of tools and features

- Extensible and highly modular

- User-friendly, enabling rapid prototyping

Cons:

- Requires understanding of underlying platforms for optimization and debugging

- Can be less efficient for models with multiple inputs/outputs

- For very specific tasks, lower-level APIs may offer more control

Prime AI is machine learning software that brings AI into your business operations. It's built with a focus on easy integration, helping companies streamline their operations with AI.

Why I Picked Prime AI:

I selected Prime AI for its impressive focus on business integration. Its user-friendly interface, coupled with a robust set of tools, facilitates the incorporation of machine learning into diverse business processes. These features make Prime AI the optimal choice for businesses seeking to integrate machine learning into their operations effortlessly.

Standout features and integrations:

Prime AI stands out with features such as pre-trained models for a quick start, custom model training, and explainable AI that makes the decision process transparent. Moreover, it offers valuable integrations with familiar data sources and applications, including SQL databases, Google Analytics, Salesforce, and more, aiding in a smooth workflow.

Pros and cons

Pros:

- Wide range of integrations

- Comprehensive support for custom model training

- Pre-trained models for quick integration

Cons:

- Steep learning curve for non-technical users

- Limited options for very specialized tasks

- Pricing could be high for small businesses

Wolfram Mathematica is sophisticated computational software for complex mathematical work, both symbolic and numerical. It is also used in deep learning and AI research and development for its precise computation capabilities.

Why I Picked Wolfram Mathematica:

I selected Wolfram Mathematica for this list because of its strength in symbolic and numerical computation, both essential to deep learning work. Its ability to carry out complex mathematical calculations with high precision sets it apart, which makes it my top choice for deep learning tasks that require advanced computation.

Standout features and integrations:

Wolfram Mathematica shines with its vast features, including advanced numerical solving, symbolic computation, data visualization, and algorithm development. Moreover, it integrates with several other data analysis tools and platforms, making it a comprehensive computational tool for deep learning tasks.

Pros and cons

Pros:

- Offers integration with other data analysis platforms

- Provides a wide array of computational tools and features

- Exceptional symbolic and numerical computation capabilities

Cons:

- Requires a steep learning curve for optimal usage

- Higher cost in comparison to some other computational tools

- The interface might be overwhelming for new users

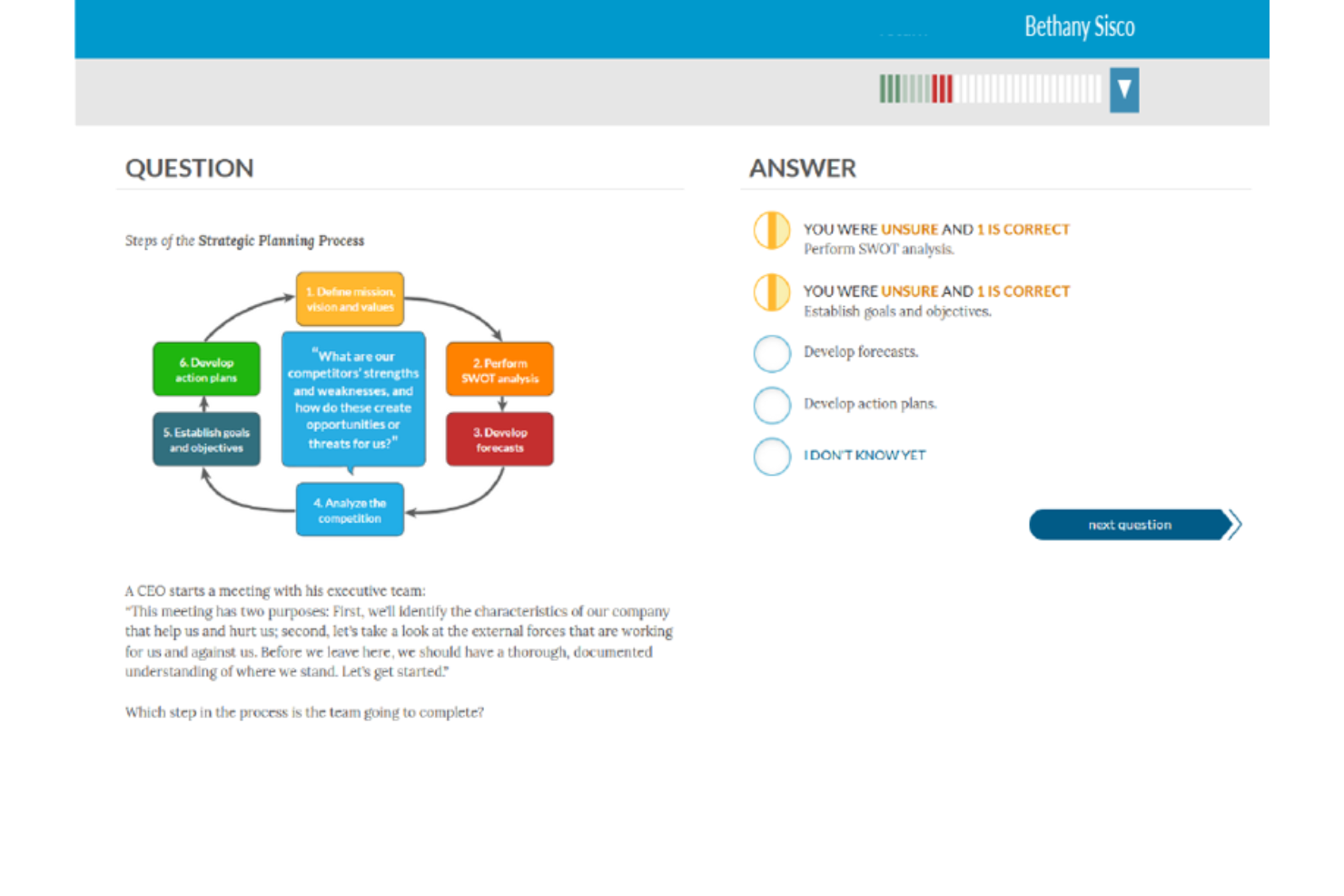

Amplifire is a learning platform that uses AI-driven adaptive learning to improve learning outcomes. It focuses on identifying and correcting knowledge gaps and misconceptions, which is the main way it improves those outcomes.

Why I Picked Amplifire:

I selected Amplifire for this list due to its unique approach to learning enhancement. The platform stands out with its AI-driven adaptive learning methods that accurately identify and address learners' gaps in knowledge. This improves learning outcomes, making it a powerful tool in educational and corporate training environments.

Standout features and integrations:

Amplifire offers features like knowledge gap identification, AI-driven personalized learning paths, and performance tracking. It integrates with various learning management systems (LMS) like Moodle, Blackboard, and Canvas, allowing users to incorporate Amplifire's features into their learning environments.

Pros and cons

Pros:

- Integrates with various learning management systems

- Identifies and addresses knowledge gaps

- Uses AI-driven methods to enhance learning outcomes

Cons:

- Could offer more customization options for unique learning environments

- May require training to maximize its features

- Pricing details are not transparent

Best for leveraging powerful GPU-accelerated AI and Deep Learning tools

The NVIDIA GPU Cloud (NGC) is a cloud-based platform that provides access to a comprehensive catalog of GPU-accelerated software for AI, machine learning, and HPC. NGC makes it easy for researchers and data scientists to develop, test, and deploy AI and HPC applications with pre-integrated GPU-accelerated software.

Why I Picked NVIDIA GPU Cloud (NGC):

I picked NGC for its computational power, which builds on NVIDIA's GPU hardware and CUDA software stack. What distinguishes NGC is its catalog of GPU-optimized software for deep learning and machine learning, along with its ability to significantly reduce the time it takes to deploy AI applications. Because its software is pre-integrated and containerized, NGC is best for leveraging GPU-accelerated AI and deep learning tools.

Standout features and integrations:

NGC's main features include a wide range of pre-trained models, performance-engineered containers, and industry-specific SDKs. The platform also integrates with major cloud providers, such as AWS, Azure, and Google Cloud, which makes deploying models to these services straightforward.

Pros and cons

Pros:

- Integration with major cloud providers

- Broad selection of pre-trained models

- Powerful GPU-accelerated software

Cons:

- Requires knowledge of NVIDIA’s ecosystem

- May be overkill for smaller projects or businesses

- The cost can add up quickly with heavy usage
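To see how GPU-hour pricing adds up, here is the arithmetic using the starting rate of $0.09 per GPU per hour listed in the summary table. The cluster size and hours are hypothetical usage figures; actual bills depend on GPU type, provider, and plan.

```python
def gpu_cost(rate_per_gpu_hour: float, gpus: int, hours: float) -> float:
    """Cost of running `gpus` GPUs for `hours` hours at a flat hourly rate."""
    return round(rate_per_gpu_hour * gpus * hours, 2)


# Hypothetical usage: 8 GPUs running around the clock for a 30-day month.
monthly = gpu_cost(0.09, gpus=8, hours=24 * 30)
print(monthly)  # 518.4
```

Cost scales linearly with both GPU count and hours, so an always-on cluster costs far more than one that spins up only for training jobs.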

Other Deep Learning Software

Below are additional deep learning tools that I shortlisted but that didn't make the top 10. They're still worth checking out.

- Torch

Good for advanced algorithm development with extensive libraries

- Appen

Good for accessing large-scale, diverse human-annotated datasets

- Industrytics

Good for enabling predictive maintenance in industrial environments

- Intel Deep Learning Training Tool

Good for accelerating deep learning model training on Intel hardware

- Caffe

Good for fast prototyping of deep learning models

- Môveo AI

Good for optimizing logistics and supply chain operations

- Aporia

Good for monitoring and explaining AI models in production

- Lt for labs

Good for improving lab efficiency with machine learning

- Fixzy Assist

Good for improving maintenance processes with predictive AI

- Diffgram

Good for improving data labeling and annotation in machine learning projects

- PaperEntry

Good for automating data entry and digitizing paperwork

- Machine Learning on AWS

Good for deploying scalable machine learning solutions in the cloud

- DataRobot

Good for automating machine learning model building and deployment

- Neural Designer

Good for simplifying complex data analytics with neural networks

- Cognex ViDi Suite

Good for quality inspection with deep learning-based machine vision

- Valohai

Good for managing end-to-end machine learning pipelines

- Lityx

Good for advanced analytics and marketing automation

Selection Criteria for Choosing Deep Learning Software

I've spent significant time researching and personally testing machine learning tools, putting them through their paces in a variety of scenarios. During this rigorous process, I've evaluated numerous tools, focusing on certain key criteria that are essential for anyone considering adopting this type of software.

Core Functionality

A good machine-learning tool should provide:

- Ability to handle various data types (structured, unstructured, etc.)

- Support for various machine learning algorithms

- Capabilities to train, test, and deploy models

- Flexibility in model tuning and optimization
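The train-and-test part of that core functionality can be sketched in a few lines: hold out a slice of data the model never sees during training and measure performance there. This stdlib-only example uses a hypothetical one-parameter model (a least-squares line through the origin) and toy data purely to illustrate the workflow.

```python
import random


def train_test_split(data: list, test_fraction: float = 0.25, seed: int = 0) -> tuple[list, list]:
    """Shuffle a copy of the data and hold out the last `test_fraction` of it."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def fit_slope(pairs: list[tuple[float, float]]) -> float:
    """Least-squares slope for y ≈ w * x (a line through the origin)."""
    return sum(x * y for x, y in pairs) / sum(x * x for x, _ in pairs)


def mean_squared_error(w: float, pairs: list[tuple[float, float]]) -> float:
    return sum((y - w * x) ** 2 for x, y in pairs) / len(pairs)


data = [(x, 3.0 * x) for x in range(1, 21)]  # toy data with true slope 3
train, test = train_test_split(data)
w = fit_slope(train)
print(len(train), len(test), w, mean_squared_error(w, test))
```

The fixed seed makes the split reproducible, which matters when you compare models: each one should be scored on the same held-out data.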

Key Features

These are the features that, I believe, make a significant difference in a machine-learning tool:

- Automated Machine Learning (AutoML): The tool should offer some level of automation to simplify the model-building process.

- Model Interpretability: Users need to understand how a model makes its predictions, so the tool should offer features to interpret model behavior.

- Scalability: As the size and complexity of data increase, the tool should be able to scale accordingly.

- Data Preprocessing: The tool should provide functionalities to clean, transform, and manipulate data, as these steps are critical in the machine learning pipeline.

- Integration: The tool should integrate with various data sources and other software tools.
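Interpretability features often rely on techniques such as permutation importance: shuffle one feature's values and measure how much the model's error grows. Below is a minimal stdlib sketch of that technique; `model` is a hypothetical hand-written function standing in for a trained one, and the data is synthetic.

```python
import random
from typing import Callable

Row = list[float]


def mse(model: Callable[[Row], float], X: list[Row], y: list[float]) -> float:
    """Mean squared error of the model's predictions."""
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)


def permutation_importance(
    model: Callable[[Row], float],
    X: list[Row],
    y: list[float],
    n_repeats: int = 5,
    seed: int = 0,
) -> list[float]:
    """Average increase in MSE when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with its shuffled value.
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mse(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def model(row: Row) -> float:
    """Hypothetical trained model: relies on feature 0 and ignores feature 1."""
    return 2.0 * row[0]


X = [[float(i), float(i % 3)] for i in range(10)]
y = [2.0 * row[0] for row in X]
importances = permutation_importance(model, X, y)
print(importances)  # feature 0 gets a positive score; feature 1 scores 0.0
```

Because the technique only needs predictions, it works with any model, which is why it is a common building block for explainability features.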

Usability

For a machine learning tool to be effective, it should offer:

- A user-friendly interface that allows users to easily navigate and operate the tool, regardless of their level of technical expertise.

- Smooth onboarding and support so that users can quickly get up to speed and have access to help when needed.

- Comprehensive documentation and self-learning resources, since machine learning can be complex and users often need to consult additional material.

- Support for multiple programming languages, as users may have different preferences and skill sets.

- Role-based access control, which matters in teams where individuals need different access levels based on their roles and responsibilities.

Most Common Questions Regarding Deep Learning Software (FAQs)

What are the benefits of using deep learning software?

The benefits of using deep learning software are numerous. Tools like image recognition software can help automate security processes, improving efficiency and safety. They can help to make sense of large, complex datasets, revealing patterns and relationships that might not be otherwise noticeable.

Additionally, deep learning software can be used to create predictive models that can help organizations make more informed decisions. Furthermore, these tools can be integrated with other systems to provide comprehensive solutions. Lastly, many of these tools come with robust support and learning resources, which can be highly beneficial for those new to the field.

How much do these deep learning tools typically cost?

Pricing for deep learning software varies greatly with the tool's complexity and the organization's size. Some platforms offer free versions or trials; among the paid tools reviewed above, most plans start at roughly $25 to $60 per user per month, and advanced features and capabilities can push costs into the thousands.

What are the standard pricing models for deep learning software?

Pricing models for deep learning software typically fall into three categories:

- Per user: This is where the cost depends on the number of users using the software.

- Per project: Some vendors charge based on the number of projects.

- Enterprise: For larger organizations, software providers usually offer a custom pricing model based on the specific needs and scale of the business.
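A quick way to compare these models is to compute the annual cost for your own team. The sketch below uses Comet's listed $39/user/month (billed annually) from the summary table for the per-user case; the $100/project/month rate and the team and project counts are hypothetical placeholders, not quotes from any vendor.

```python
def per_user_annual(price_per_user_month: float, users: int) -> float:
    """Annual cost when billing is per seat per month."""
    return price_per_user_month * users * 12


def per_project_annual(price_per_project_month: float, projects: int) -> float:
    """Annual cost when billing is per active project per month."""
    return price_per_project_month * projects * 12


# Team of 5 on a $39/user/month plan vs. a hypothetical $100/project/month plan with 2 projects.
print(per_user_annual(39, users=5))         # 2340
print(per_project_annual(100, projects=2))  # 2400
```

With these assumed numbers the two plans cost almost the same, but adding a sixth user (2,808 per year) would tip the balance toward per-project billing, so it pays to model expected team growth before committing.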

What is the range of pricing for deep learning software?

The cost of deep learning software can start from as low as $20 per month for more straightforward tools to over $1000 per month for more sophisticated solutions. However, the price largely depends on the user’s specific needs, such as the volume of data to be processed, the level of customization required, and the level of support needed.

What are some of the cheapest and most expensive deep learning software?

Tools like Diffgram and Caffe offer basic functionality at the cheaper end of the scale and can be a good starting point for beginners. At the more expensive end, tools like DataRobot and Industrytics provide advanced capabilities geared toward larger organizations or more complex projects.

Are there any free deep-learning tools available?

Yes, several deep learning tools offer free versions. Caffe and Keras are open-source deep learning frameworks that are entirely free to use. Similarly, AWS provides a free tier for its machine learning services, although it comes with usage limits.


Key Takeaways

Selecting the best Deep Learning Software requires an understanding of your unique needs and the specific features that will cater to those needs. Factors such as the core functionality, key features, usability, and pricing play a significant role in determining the ideal fit for your organization.

- Core Functionality: Deep learning software should be able to perform tasks such as data preprocessing, model training, evaluation, and inference efficiently. The tool should offer features that help handle large data sets, train deep learning models, and deploy models into production.

- Key Features: Look for features like automation capabilities, scalability, data visualization, and a strong support community. These features can drastically enhance the efficiency and effectiveness of your deep learning processes.

- Pricing and Usability: The pricing model should align with your budget while offering a fair balance between cost and features. The tool's usability, user interface, ease of onboarding, customer support, and documentation can significantly influence the user experience and productivity.

Want More?

There are many noteworthy resources for the best artificial intelligence platforms, including insightful books, AI conferences, and entertaining podcasts to brush up on your knowledge.

For more on artificial intelligence platforms and tools, subscribe to The CTO Club’s newsletter to stay up to date with the latest in generative AI.