Best Open Source ETL Tools Shortlist

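Here’s my shortlist of the best open source ETL tools:

1. CloverDX
2. Apache Kafka
3. Pentaho Kettle
4. Singer
5. Hevo Data
6. KETL
7. Scriptella
8. Talend Open Studio
9. Apache Camel
10. Apache NiFi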


Managing data across multiple systems is messy — inconsistent formats, integration failures, and data loss can slow down your team and affect decision-making. Open-source ETL tools can help by automating data extraction, transformation, and loading so your data stays clean, accurate, and accessible.

I've tested and reviewed the best options out there, focusing on real-world performance and ease of use. This guide will help you find the right tool to simplify your data pipeline and make data work for your team — without wasting time on trial and error.

Why Trust Our Software Reviews

We’ve been testing and reviewing SaaS development software since 2023. As tech experts ourselves, we know how critical and difficult it is to make the right decision when selecting software. We invest in deep research to help our audience make better software purchasing decisions.

We’ve tested more than 2,000 tools for different SaaS development use cases and written over 1,000 comprehensive software reviews. Learn how we stay transparent & check out our software review methodology.

Best Open Source ETL Tools Summary

This comparison chart summarizes pricing details for my top open source ETL tool selections to help you find the best one for your budget and business needs.

| # | Tool | Best For | Trial Info | Price |
|---|---|---|---|---|
| 1 | CloverDX | Best for complex data tasks | Free trial available | From $5,500/unit/year |
| 2 | Apache Kafka | Best for real-time data streaming | Free plan available | Free |
| 3 | Pentaho Kettle | Best for data transformation | 30-day free trial | From $4/user/month |
| 4 | Singer | Best for data extraction scripts | Not available | Free to use |
| 5 | Hevo Data | Best for automated data integration | 14-day free trial + free demo | From $239/month |
| 6 | KETL | Best for scalable ETL solutions | Free plan available | Free |
| 7 | Scriptella | Best for simple ETL scripting | Not available | Free to use |
| 8 | Talend Open Studio | Best for big data integration | 14-day trial available | Pricing upon request |
| 9 | Apache Camel | Best for integration patterns | Not available | Free to use |
| 10 | Apache NiFi | Best for data flow automation | Not available | Free to use |


Best Open Source ETL Tool Reviews

Below are my detailed summaries of the best open source ETL tools that made it onto my shortlist. Each review covers the key features, pros and cons, integrations, and ideal use cases of the tool to help you find the best one for you.

CloverDX is a data integration platform that serves business users and IT teams by automating, orchestrating, and transforming data. It supports various deployment options, making it versatile for different business needs.

Why I picked CloverDX: CloverDX is tailored for complex data tasks with its intuitive interface and versatile deployment options, including on-premise and cloud services like AWS, Azure, and Google Cloud. It offers data services for API access and collaboration tools, ensuring your team can work efficiently across different environments. The inclusion of a data catalog provides reliable data access, which is crucial for maintaining data integrity. These features make CloverDX a standout choice for teams dealing with intricate data processes.

Standout features & integrations:

Features include an intuitive interface for business users, data services for API access, and a data catalog for reliable data access. These elements ensure you can manage and access data efficiently. The platform also offers collaboration tools to enhance teamwork.

Integrations include AWS, Azure, Google Cloud, Snowflake, Salesforce, Microsoft SQL Server, Oracle, PostgreSQL, MongoDB, and Kafka.

Pros and cons

Pros:

- Strong API access capabilities

- Versatile deployment options

- Supports complex data processes

Cons:

- Requires technical expertise

- Potentially steep learning curve

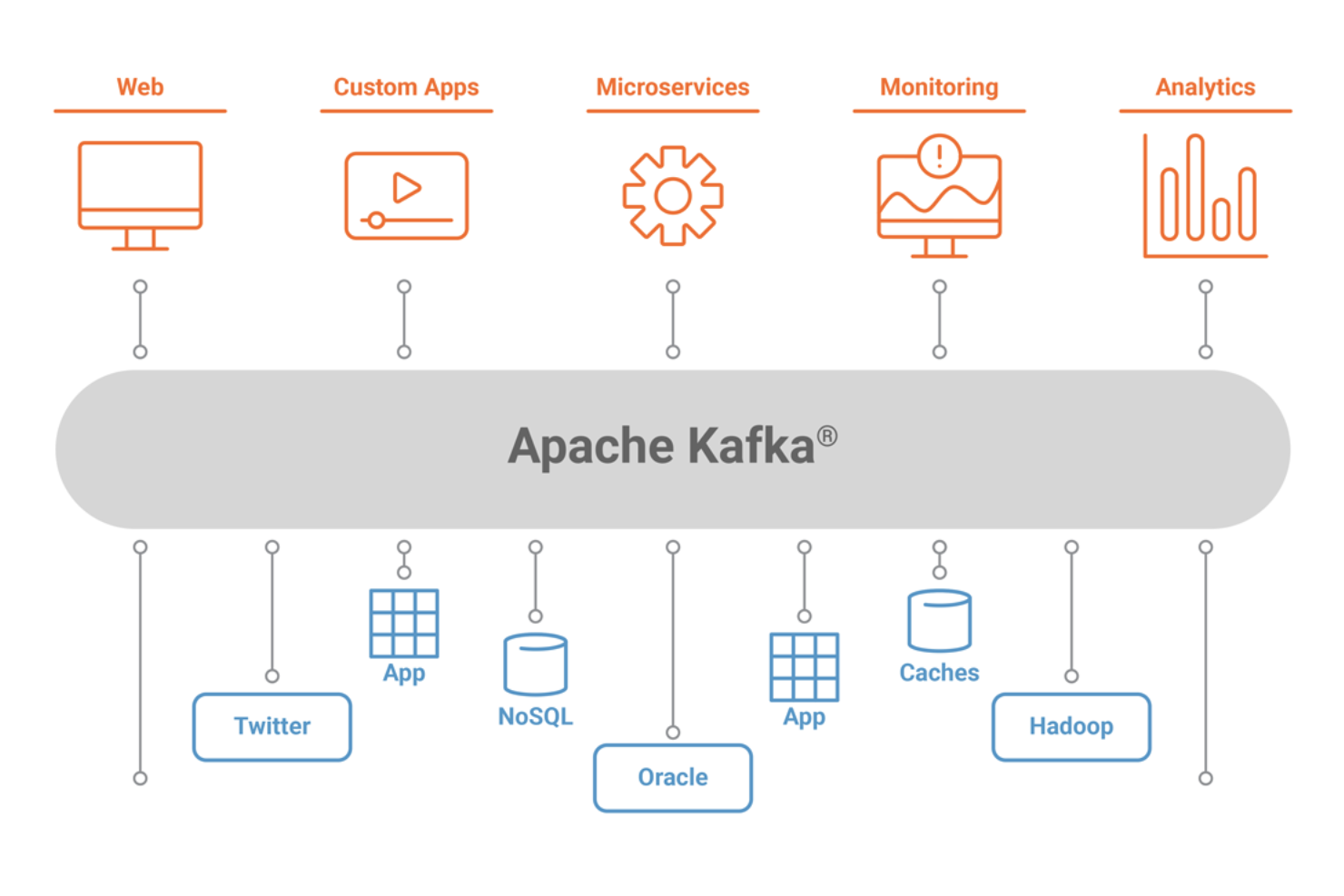

Apache Kafka is a distributed event streaming platform used by developers and enterprises to build real-time data pipelines and streaming applications. It's designed to handle large volumes of data quickly and efficiently, making it ideal for businesses that require real-time data processing.

Why I picked Apache Kafka: It's designed for real-time data streaming, supporting high-throughput and low-latency processing, which is essential for modern data-driven applications. Kafka's distributed architecture replicates data across brokers, providing high availability and fault tolerance, so your data stays accessible even when individual servers fail. The platform's scalability allows you to handle growing data needs without compromising performance. Kafka also offers strong durability guarantees, protecting data that has been written against loss.

Standout features & integrations:

Features include a distributed architecture that ensures high availability, built-in data replication for fault tolerance, and a robust messaging system for scalable data processing. These features make it well-suited for handling large volumes of data efficiently. Kafka's log-based storage system ensures data durability and reliability.

Integrations include Confluent, AWS, Azure, Google Cloud, MongoDB, Cassandra, Elasticsearch, Splunk, Hadoop, and MySQL.
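Kafka's log-based storage also explains how several applications can read the same stream at their own pace: each topic partition is an append-only log, every record gets a sequential offset, and each consumer tracks its own position in that log. The toy model below is a minimal, in-memory Python sketch of that idea, assuming a single partition with no replication, retention, or disk storage; `PartitionLog` and `Consumer` are hypothetical classes, not Kafka's client API.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionLog:
    """Toy, in-memory model of a single Kafka-style topic partition (hypothetical class)."""
    records: list = field(default_factory=list)

    def append(self, value: str) -> int:
        """Append a record to the end of the log and return its offset."""
        self.records.append(value)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> list:
        """Return up to max_records records starting at offset; reading never removes records."""
        return self.records[offset:offset + max_records]


@dataclass
class Consumer:
    """Hypothetical consumer that tracks its own offset, independently of other consumers."""
    log: PartitionLog
    offset: int = 0

    def poll(self, max_records: int = 10) -> list:
        batch = self.log.read(self.offset, max_records)
        self.offset += len(batch)  # move this consumer's position past what it has read
        return batch


log = PartitionLog()
for event in ["order_created", "order_paid", "order_shipped"]:
    log.append(event)

fast = Consumer(log)
slow = Consumer(log)
print(fast.poll())               # ['order_created', 'order_paid', 'order_shipped']
print(slow.poll(max_records=1))  # ['order_created']
print(slow.poll())               # ['order_paid', 'order_shipped']
```

Because reading never removes records, a slow consumer can catch up later and a new consumer can replay the stream from offset 0. Real Kafka adds partitioning across brokers, replication, retention policies, and consumer groups that commit their offsets to the cluster.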

Pros and cons

Pros:

- Strong data durability

- Low-latency processing

- Handles high-throughput data

Cons:

- Configuration can be challenging

- Limited built-in monitoring

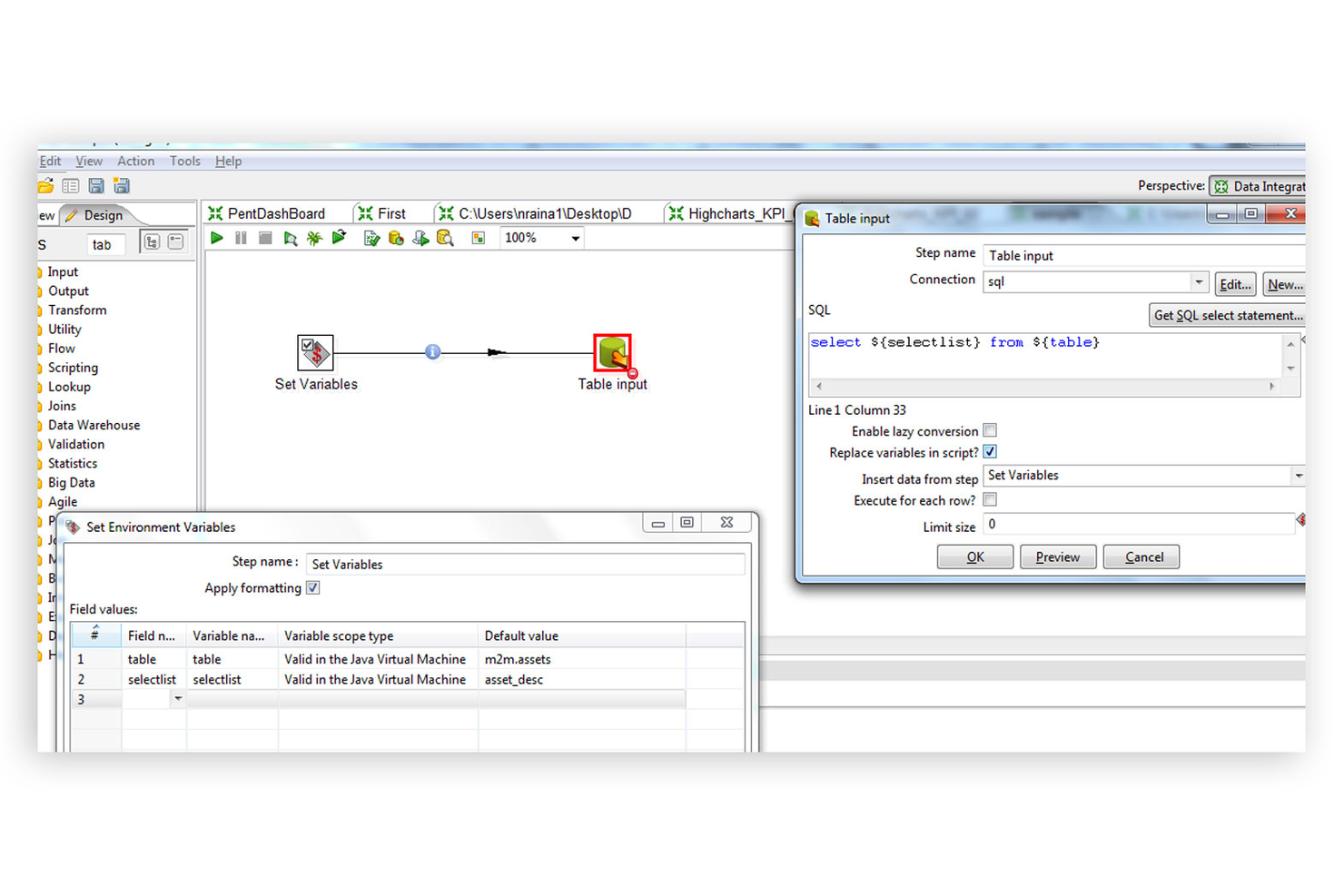

Pentaho Kettle is an open-source ETL tool that caters to data engineers and business analysts needing to perform complex data transformations. It provides a visual interface for designing data pipelines, making it easier to manage data flows and execute transformations efficiently.

Why I picked Pentaho Kettle: It's known for its data transformation capabilities, providing a visual drag-and-drop interface that simplifies the creation of complex workflows. Kettle offers extensive support for various data sources, ensuring your team can integrate data from multiple origins. Its graphical interface reduces the need for extensive coding, which is beneficial for teams with limited programming expertise. The tool's flexibility in handling different data types and formats makes it a versatile choice for diverse data needs.

Standout features & integrations:

Features include a visual drag-and-drop interface that simplifies workflow design, allowing you to build complex data transformations without coding. The tool supports a wide array of data sources, making integration straightforward. Kettle's flexibility in handling multiple data types and formats ensures compatibility with diverse data environments.

Integrations include Oracle, MySQL, PostgreSQL, Microsoft SQL Server, MongoDB, Amazon Redshift, Google BigQuery, Salesforce, SAP, and Hadoop.

Pros and cons

Pros:

- Extensive data source support

- Handles diverse data types

- Visual interface for transformations

Cons:

- Initial setup complexity

- Can be resource-intensive

Singer is an open-source ETL tool that caters to data engineers and developers looking to create custom data extraction scripts. It provides a simple yet effective way to move data between databases, web APIs, files, and other sources, ensuring flexibility and control over data flows.

Why I picked Singer: It's known for its data extraction scripts, which allow you to customize how data is pulled from different sources. Singer uses a simple command-line interface, making it straightforward to automate tasks. The tool employs a modular approach with reusable components, so you can tailor data pipelines to fit your needs. Its focus on simplicity and flexibility makes it a great choice for teams that require specific data handling capabilities.

Standout features & integrations:

Features include a simple command-line interface that makes automation easy. The tool's modular approach allows you to create reusable components, saving time and effort. Singer's flexibility in handling various data sources ensures you can customize your data flows to meet specific requirements.

Integrations include PostgreSQL, MySQL, Salesforce, Google Analytics, Redshift, Slack, Snowflake, MongoDB, Marketo, and HubSpot.
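Singer's modularity comes from a simple contract: a tap (extractor) writes JSON messages, one per line, to standard output, and a target (loader) reads them from standard input, so any tap can be piped into any target. The Singer specification defines `SCHEMA`, `RECORD`, and `STATE` message types. The sketch below is a minimal, hypothetical tap that emits those messages for a hard-coded `users` stream using only the Python standard library; the stream name, fields, and the `build_messages` and `main` functions are illustrative assumptions.

```python
import json
import sys


def build_messages(rows: list) -> list:
    """Hypothetical tap logic: describe the stream, send its rows, then checkpoint progress."""
    # SCHEMA comes first so the target knows the stream's shape and primary key.
    messages = [{
        "type": "SCHEMA",
        "stream": "users",
        "schema": {
            "type": "object",
            "properties": {"id": {"type": "integer"}, "email": {"type": "string"}},
        },
        "key_properties": ["id"],
    }]
    # One RECORD message per extracted row.
    messages += [{"type": "RECORD", "stream": "users", "record": row} for row in rows]
    # STATE records progress so a later run could resume after the last row sent.
    last_id = rows[-1]["id"] if rows else None
    messages.append({"type": "STATE", "value": {"users": {"last_id": last_id}}})
    return messages


def main() -> None:
    # Stand-in for data a real tap would pull from a database or API.
    rows = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": "b@example.com"}]
    for message in build_messages(rows):
        # Singer messages are newline-delimited JSON written to stdout.
        sys.stdout.write(json.dumps(message) + "\n")


if __name__ == "__main__":
    main()
```

Saved as a script, its output could be piped into a Singer target's standard input, which is how Singer lets you mix and match extractors and loaders.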

Pros and cons

Pros:

- Modular and reusable components

- Simple command-line interface

- Customizable data extraction

Cons:

- No graphical interface

- Requires scripting knowledge

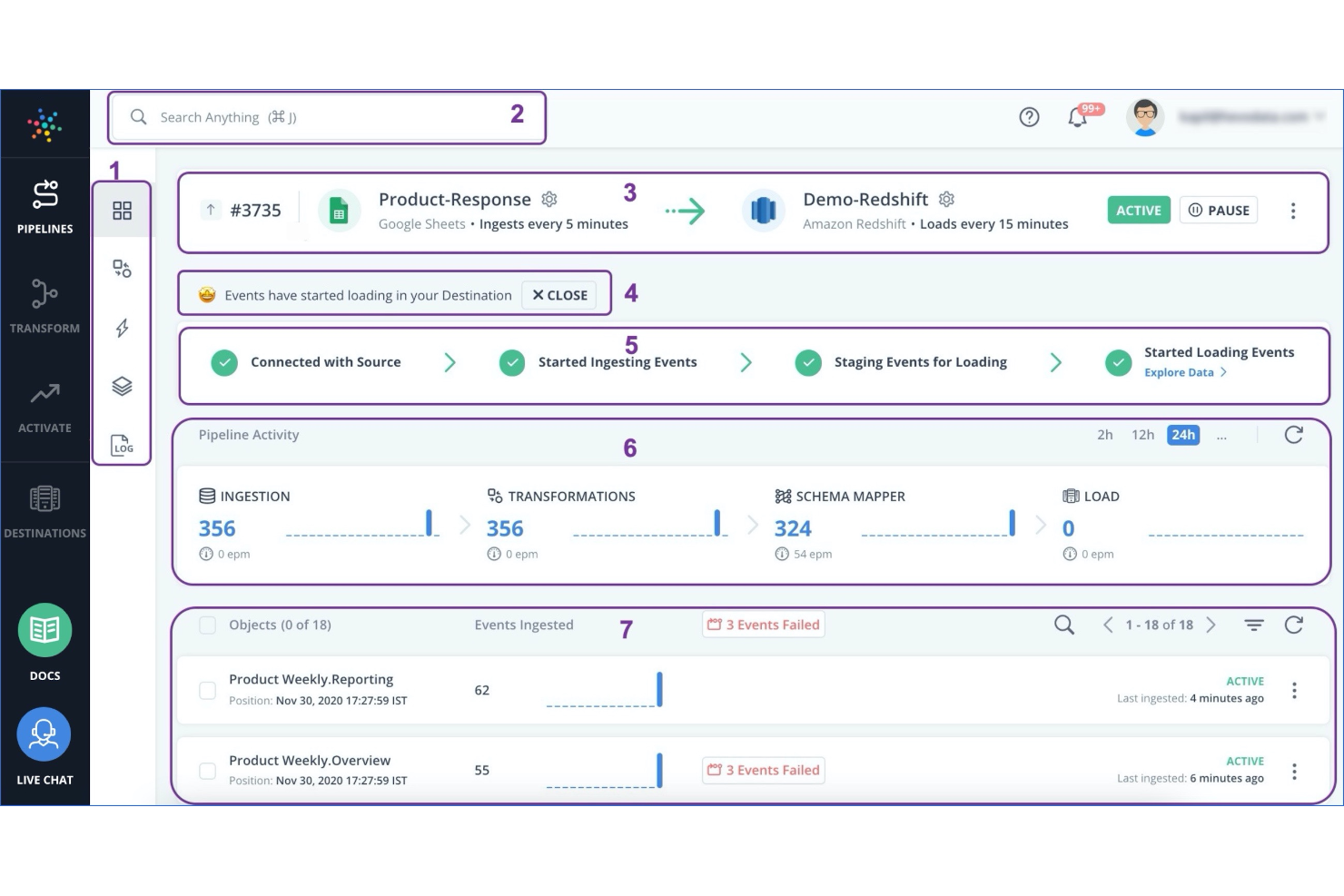

Hevo Data is an ETL and data integration platform aimed at data teams seeking reliable and automated data pipelines. It facilitates data ingestion from various sources with minimal setup and no programming, enhancing data accuracy and decision-making.

Why I picked Hevo Data: It excels in automated data integration, with features like real-time pipeline monitoring and high-speed data replication, ensuring your team stays updated with minimal effort. Hevo's compliance and security features provide peace of mind, especially for enterprise users. The platform's transparent pricing means no hidden fees, which is a big plus for budgeting. Its user-friendly interface allows you to manage data without extensive programming knowledge.

Standout features & integrations:

Features include real-time pipeline monitoring that keeps your data current, advanced management options for greater control, and compliance and security features to protect sensitive information. These features help your team work efficiently and securely. Hevo also offers high-speed data replication to ensure data consistency.

Integrations include Salesforce, Google Analytics, Amazon Redshift, Snowflake, BigQuery, MySQL, PostgreSQL, Oracle, MS SQL Server, and HubSpot.
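Hevo automates replication for you, but it helps to understand what incremental replication involves. One common, query-based approach keeps a high-water mark (here, the latest `updated_at` value already copied) and on each run fetches only newer rows, upserting them into the destination. The sketch below illustrates that general technique with Python's built-in `sqlite3` module; the `orders` table, its columns, and the `replicate_incrementally` function are hypothetical, and this is not a description of Hevo's internal replication engine.

```python
import sqlite3


def replicate_incrementally(source, target, last_seen: str) -> str:
    """Copy rows changed after last_seen from source to target; return the new high-water mark."""
    rows = source.execute(
        "SELECT id, status, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (last_seen,),
    ).fetchall()
    # INSERT OR REPLACE overwrites an existing row with the same primary key instead of duplicating it.
    target.executemany("INSERT OR REPLACE INTO orders (id, status, updated_at) VALUES (?, ?, ?)", rows)
    target.commit()
    # The newest updated_at copied becomes the starting point for the next run.
    return rows[-1][2] if rows else last_seen


schema = "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)"
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute(schema)
target.execute(schema)
source.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (1, "created", "2024-01-01T10:00"),
    (2, "created", "2024-01-01T11:00"),
])

watermark = replicate_incrementally(source, target, "")  # first run copies every row
source.execute("UPDATE orders SET status = 'paid', updated_at = '2024-01-02T09:00' WHERE id = 1")
watermark = replicate_incrementally(source, target, watermark)  # second run copies only order 1

print(watermark)  # 2024-01-02T09:00
print(target.execute("SELECT id, status FROM orders ORDER BY id").fetchall())  # [(1, 'paid'), (2, 'created')]
```

Query-based replication like this misses hard deletes and can skip rows that share the last timestamp but commit later; log-based change data capture (reading the database's transaction log) avoids both, at the cost of more setup.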

Pros and cons

Pros:

- High-speed replication

- Real-time monitoring

- Automated data integration

Cons:

- Not suitable for all data types

- Limited customization options

KETL is an open-source ETL platform designed for data engineers and IT professionals who need scalable data integration and scheduling solutions. It provides a multi-threaded, XML-based architecture that supports complex data manipulations, making it suitable for extensive data processing tasks.

Why I picked KETL: It offers scalable ETL solutions with its ability to handle large data volumes across multiple servers and CPUs. The platform's job execution and scheduling manager ensures efficient workflow management, which is crucial for large-scale operations. Its centralized repository for job definitions helps maintain organization and control over data processes. Additionally, KETL's performance monitoring capabilities allow your team to track and optimize data workflows effectively.

Standout features & integrations:

Features include a multi-threaded architecture that enhances scalability and performance. KETL supports a wide range of job types, including SQL, OS, and XML, providing flexibility in processing different data formats. The platform's centralized repository for job definitions helps streamline workflow management and ensure consistency.

Integrations include Oracle, MySQL, PostgreSQL, Microsoft SQL Server, MongoDB, Amazon Redshift, Google BigQuery, Salesforce, SAP, and Hadoop.
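KETL's job execution and scheduling manager has to run job definitions in the right order. A common way to model that kind of dependency-aware scheduling is a directed acyclic graph of jobs executed in topological order. The sketch below shows the general technique with the standard library's `graphlib` module (Python 3.9+); the job names and the `run_jobs` function are hypothetical, and this is not KETL's own XML job definition format.

```python
from graphlib import TopologicalSorter

# Hypothetical job definitions: each job maps to the set of jobs that must finish before it runs.
JOBS = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_sales": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform_sales"},
    "refresh_reports": {"load_warehouse"},
}


def run_jobs(jobs: dict) -> list:
    """Run jobs in dependency order and return the order in which they ran."""
    sorter = TopologicalSorter(jobs)
    sorter.prepare()  # raises graphlib.CycleError if the job graph contains a cycle
    executed = []
    while sorter.is_active():
        # Every job returned here has all of its dependencies satisfied,
        # so a real scheduler could hand this whole batch to parallel workers.
        ready = sorted(sorter.get_ready())
        for job in ready:
            executed.append(job)  # placeholder for actually executing the job
            sorter.done(job)
    return executed


print(run_jobs(JOBS))
# ['extract_customers', 'extract_orders', 'transform_sales', 'load_warehouse', 'refresh_reports']
```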

Pros and cons

Pros:

- Centralized job repository

- Supports complex data manipulation

- Scalable across multiple servers

Cons:

- Documentation can be sparse

- Limited community support

Scriptella is an open-source ETL tool designed for developers and data engineers who need straightforward data integration solutions. It performs data transformations and migrations using simple scripting, making it accessible for users who prefer text-based configurations.

Why I picked Scriptella: It excels in simple ETL scripting, providing an easy-to-use solution for those who want to avoid complex configurations. Scriptella supports multiple data sources and scripting languages, allowing your team to work with what they know best. Its lightweight design ensures quick deployment and minimal overhead, which is ideal for smaller projects. The tool's focus on simplicity allows you to create and manage ETL processes without extensive training.

Standout features & integrations:

Features include support for multiple scripting languages, which lets you use your preferred syntax. The tool's lightweight design ensures it runs efficiently with minimal resources. Its straightforward configuration files make it easy to set up and manage ETL processes.

Integrations include JDBC, XML, LDAP, JNDI, Velocity, and EJB.

Pros and cons

Pros:

- Supports multiple scripting languages

- Lightweight and efficient

- Simple scripting for ETL

Cons:

- Not ideal for large datasets

- Requires scripting knowledge

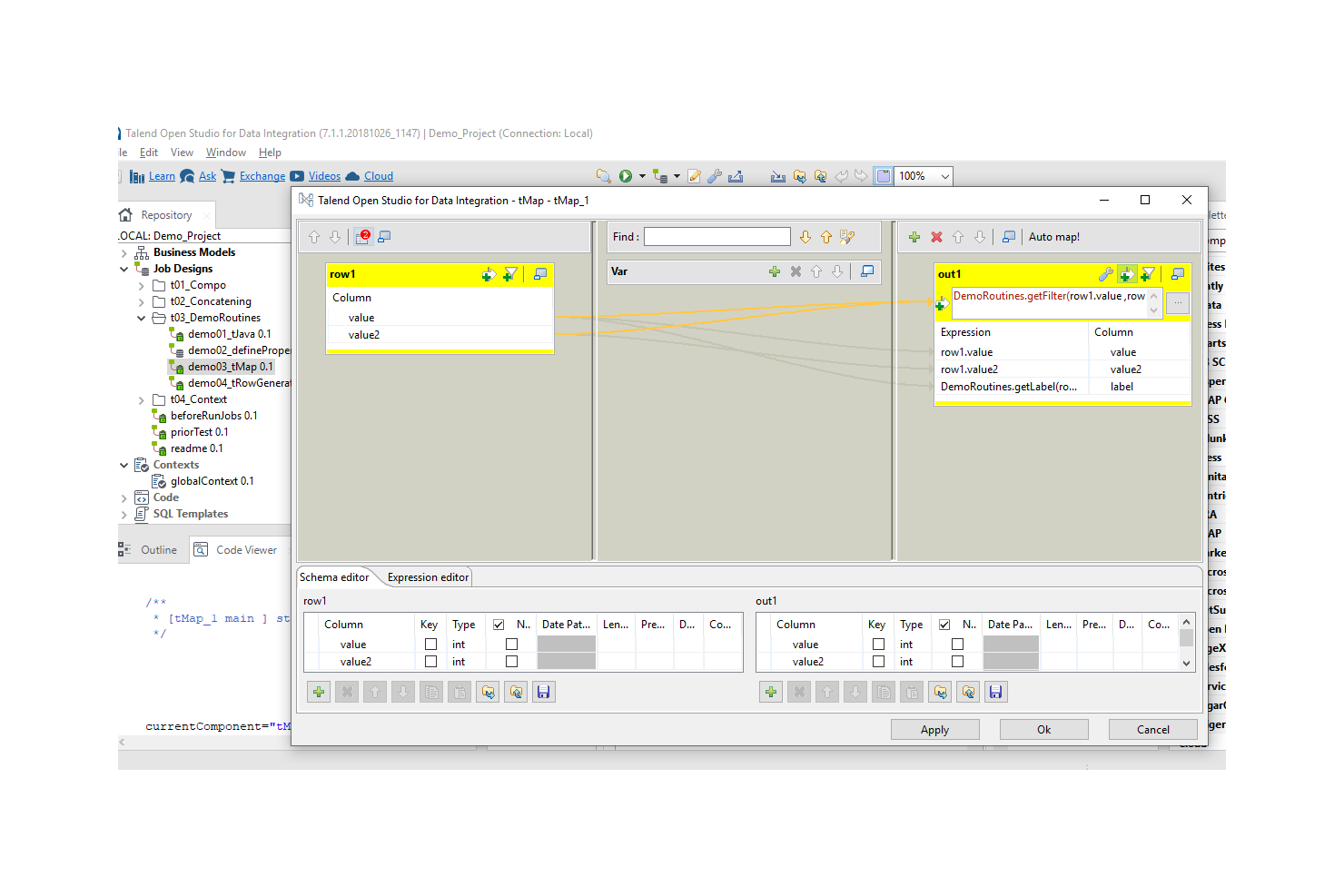

Talend Open Studio is a suite of open source tools that enables ETL developers to build basic data pipelines in less time. It features an Eclipse-based development environment and more than 900 pre-built connectors, including Oracle, Teradata, Marketo, and Microsoft SQL Server. The platform includes five components: Talend Open Studio for Data Integration, Big Data, Data Quality, Enterprise Service Bus (ESB), and Master Data Management (MDM).

Talend Open Studio is a great companion for many business intelligence (BI) tools. It provides several methods for converting multiple datasets into formats compatible with popular BI platforms, including Jasper, OLAP, and SPSS. Users can also glean insights directly from Talend Open Studio, which can generate basic visualizations, including bar charts.

Talend Open Studio supports integrations with several databases, including Microsoft SQL Server, Postgres, MySQL, Teradata, and Greenplum.

Talend Open Studio is free to download for all users.

Apache Camel is an open-source integration framework designed for developers and architects who need to implement enterprise integration patterns. It facilitates the routing and mediation of messages between systems, making it ideal for complex integration scenarios.

Why I picked Apache Camel: It excels in using integration patterns to streamline connectivity between different systems. Camel supports a wide range of protocols and data formats, ensuring your team can connect disparate systems easily. Its domain-specific language (DSL) provides a flexible way to define routing and mediation rules, which is crucial for handling complex integrations. Its ability to integrate with many kinds of endpoints gives you a great deal of flexibility in designing integration solutions.

Standout features & integrations:

Features include a rich set of enterprise integration patterns that simplify the integration process. The tool's domain-specific language allows you to define complex routing rules with ease. Apache Camel also supports a wide range of data formats and protocols, which ensures compatibility with multiple systems.

Integrations include AWS, Apache Kafka, ActiveMQ, RabbitMQ, Salesforce, Google Cloud, Azure, JMS, File, and FTP.
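The content-based router is one of the enterprise integration patterns Camel implements: each incoming message is inspected and sent to the first destination whose condition it matches, with a fallback for everything else. In Camel's Java DSL this is written with `choice()`, `when()`, and `otherwise()`. To show the pattern itself without a Camel runtime, the Python sketch below is a plain, hypothetical implementation; `ContentBasedRouter`, the order messages, and the list-based endpoints are assumptions for illustration and not part of Camel's API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ContentBasedRouter:
    """Hypothetical router: send each message to the first endpoint whose predicate matches."""
    otherwise: Callable[[dict], None]           # fallback endpoint when no predicate matches
    routes: list = field(default_factory=list)  # (predicate, endpoint) pairs, checked in order

    def when(self, predicate: Callable[[dict], bool],
             endpoint: Callable[[dict], None]) -> "ContentBasedRouter":
        self.routes.append((predicate, endpoint))
        return self  # returning self allows chained, DSL-like route definitions

    def route(self, message: dict) -> None:
        for predicate, endpoint in self.routes:
            if predicate(message):
                endpoint(message)
                return
        self.otherwise(message)


# Endpoints are plain lists here; in an integration framework they would be queues, files, APIs, etc.
domestic, international, manual_review = [], [], []
router = (
    ContentBasedRouter(otherwise=manual_review.append)
    .when(lambda m: m.get("country") == "US", domestic.append)
    .when(lambda m: "country" in m, international.append)
)

for order in [{"id": 1, "country": "US"}, {"id": 2, "country": "DE"}, {"id": 3}]:
    router.route(order)

print(domestic, international, manual_review)
# [{'id': 1, 'country': 'US'}] [{'id': 2, 'country': 'DE'}] [{'id': 3}]
```

Because routes are checked in order, the more specific condition (US orders) has to be registered before the broader one (any order with a country).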

Pros and cons

Pros:

- Versatile endpoint integration

- Wide data format support

- Extensive integration patterns

Cons:

- Resource-intensive for large setups

- Documentation can be sparse

Apache NiFi is an open-source data integration tool designed for developers and data engineers needing to automate data flows. It enables the collection, processing, and distribution of data across different systems, making it ideal for real-time data handling.

Why I picked Apache NiFi: It's tailored for data flow automation, providing a user-friendly interface for designing complex workflows. NiFi's drag-and-drop interface simplifies the creation of data pipelines, which is crucial for teams without extensive coding experience. The tool supports real-time data flow management, ensuring your data remains current and relevant. Its built-in security features add an extra layer of protection for sensitive data.

Standout features & integrations:

Features include a drag-and-drop interface that simplifies workflow design, allowing you to create data pipelines with ease. NiFi's real-time data flow management ensures that your data is always up-to-date. The tool also offers built-in security features to protect your sensitive information.

Integrations include AWS, Azure, Google Cloud, Kafka, HDFS, MongoDB, Elasticsearch, MySQL, PostgreSQL, and JMS.

Pros and cons

Pros:

- Real-time data management

- User-friendly drag-and-drop interface

- Automates data flows efficiently

Cons:

- Initial setup complexity

- Can be resource-intensive

Other Open Source ETL Tools

Here are some additional open source ETL tool options that didn’t make it onto my shortlist, but are still worth checking out:

Open Source ETL Tool Selection Criteria

When selecting the best open source ETL tools to include in this list, I considered common buyer needs and pain points like data integration tool complexity and scalability. I also used the following framework to keep my evaluation structured and fair:

Core Functionality (25% of total score)

To be considered for inclusion in this list, each solution had to fulfill these common use cases:

- Data extraction from multiple sources

- Data transformation and cleaning

- Data loading to target systems

- Real-time data processing

- Batch data processing

Additional Standout Features (25% of total score)

To help further narrow down the competition, I also looked for unique features, such as:

- Support for complex data workflows

- Advanced data security features

- Integration with cloud services

- Customizable data connectors

- Automated error handling

Usability (10% of total score)

To get a sense of the usability of each system, I considered the following:

- Intuitive user interface

- Easy navigation

- Minimal learning curve

- Clear documentation

- Responsive design

Onboarding (10% of total score)

To evaluate the onboarding experience for each platform, I considered the following:

- Availability of training videos

- Interactive product tours

- Access to templates

- Live webinars for guidance

- Supportive chatbots

Customer Support (10% of total score)

To assess each software provider’s customer support services, I considered the following:

- 24/7 availability

- Multiple support channels

- Responsive help desk

- Comprehensive FAQs

- Access to community forums

Value For Money (10% of total score)

To evaluate the value for money of each platform, I considered the following:

- Competitive pricing tiers

- Free trial availability

- Cost versus feature set

- Scalability of pricing plans

- Discounts for long-term use

Customer Reviews (10% of total score)

To get a sense of overall customer satisfaction, I considered the following when reading customer reviews:

- Positive user feedback

- Commonly reported issues

- Consistency in feature performance

- Overall satisfaction ratings

- Trends in user complaints
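The percentages above add up to 100%, so a tool's overall rating can be read as a weighted average of its per-criterion scores: total = 0.25 × core functionality + 0.25 × standout features + 0.10 × (usability + onboarding + customer support + value for money + customer reviews). The sketch below assumes a 0 to 5 scale for each criterion; the scale, the dictionary keys, and the example scores are hypothetical, not the ratings used for this list.

```python
# Weights from the selection criteria above (they sum to 100%).
WEIGHTS = {
    "core_functionality": 0.25,
    "standout_features": 0.25,
    "usability": 0.10,
    "onboarding": 0.10,
    "customer_support": 0.10,
    "value_for_money": 0.10,
    "customer_reviews": 0.10,
}


def weighted_score(scores: dict) -> float:
    """Combine per-criterion scores (hypothetical 0-5 scale) into one weighted total on the same scale."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(WEIGHTS[name] * scores[name] for name in WEIGHTS), 2)


# Hypothetical scores for one tool.
example = {
    "core_functionality": 4.0,
    "standout_features": 4.0,
    "usability": 3.5,
    "onboarding": 3.0,
    "customer_support": 4.0,
    "value_for_money": 5.0,
    "customer_reviews": 4.5,
}
print(weighted_score(example))  # 4.0
```

Because core functionality and standout features together carry half the weight, a tool that is strong there can outrank one with better onboarding or support.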

How to Choose Open Source ETL Tools

It’s easy to get bogged down in long feature lists and complex pricing structures. To help you stay focused as you work through your unique software selection process, here’s a checklist of factors to keep in mind:

| Factor | What to Consider |
|---|---|
| Scalability | Ensure the tool can handle your data volume growth. Consider future needs and whether the tool supports both batch and real-time processing efficiently. |
| Integrations | Check if the tool integrates with your existing systems and data sources like databases, cloud services, and third-party applications to streamline workflows. |
| Customizability | Look for the ability to tailor data workflows to fit your specific processes. The more customizable, the better it can adapt to your evolving needs. |
| Ease of Use | Evaluate the user interface. A tool that’s easy to navigate will reduce the learning curve for your team and speed up implementation. |
| Budget | Compare pricing against your budget. Consider total cost of ownership, including hidden costs, to ensure it aligns with your financial constraints. |
| Security Safeguards | Ensure the tool offers robust security features to protect your sensitive data. Look for encryption, user access controls, and compliance with regulations. |
| Support | Check the availability of customer support. Responsive support can be crucial during implementation and troubleshooting. |
| Performance | Assess the tool's processing speed and reliability. It should consistently deliver data on time without errors to support your business operations. |

Trends in Open Source ETL Tools

In my research, I reviewed product updates, press releases, and release logs from a range of open source ETL tool vendors. Here are some of the emerging trends I’m keeping an eye on:

- Real-time processing: More tools are focusing on real-time data processing, allowing businesses to react quickly to changes and make informed decisions. For example, Apache Kafka has enhanced its streaming capabilities to support real-time analytics.

- Data observability: Vendors are adding features to improve data visibility and monitoring, helping teams identify and resolve issues faster. Tools like Apache NiFi now offer enhanced data tracking and lineage features to ensure data integrity.

- Cloud-native architecture: With the shift to cloud computing, ETL tools are being designed to leverage cloud resources efficiently. Talend Open Studio, for example, offers cloud-native capabilities to optimize performance and scalability.

- Low-code interfaces: There's a growing demand for low-code or no-code platforms that make ETL tools accessible to non-technical users. Tools like Pentaho Kettle are adopting more visual interfaces to simplify data pipeline creation.

- Data governance: As data privacy regulations tighten, ETL tools are integrating more governance features. This includes data masking and encryption options, which are becoming standard in solutions like Hevo Data to ensure compliance and secure data handling.

What Are Open Source ETL Tools?

Open source ETL tools are software solutions that facilitate the extraction, transformation, and loading of data from various sources into a centralized location. Data engineers, analysts, and IT professionals typically use these tools to efficiently manage and process large volumes of data. Real-time processing, data observability, and cloud-native capabilities help with quick decision-making, issue resolution, and efficient resource use. Overall, these tools provide the flexibility and scalability needed to handle complex data workflows and support data-driven strategies.

Features of Open Source ETL Tools

When selecting open source ETL tools, keep an eye out for the following key features:

- Real-time processing: Processes data as it comes in, helping you make timely decisions and react quickly to changes.

- Data observability: Provides visibility into data flows, allowing you to monitor and resolve issues swiftly.

- Cloud-native architecture: Utilizes cloud resources efficiently to enhance scalability and performance.

- Low-code interfaces: Simplifies the creation of data pipelines, making tools accessible to non-technical users.

- Data governance: Ensures compliance and security through features like data masking and encryption.

- Multi-source integration: Connects with various data sources to centralize and streamline data processing.

- Scalability: Handles growing data volumes and supports both batch and real-time processing.

- Customizability: Allows tailoring of data workflows to fit specific business needs and processes.

- Performance monitoring: Tracks and optimizes data workflows to maintain efficiency and accuracy.

- Scheduling manager: Automates task execution and manages workflows to improve productivity.

Benefits of Open Source ETL Tools

Implementing open source ETL tools provides several benefits for your team and your business. Here are a few you can look forward to:

- Cost efficiency: Being open-source, these tools often come with no licensing fees, reducing overall costs for your business.

- Flexibility: Customizable workflows allow you to tailor data processes to fit your specific needs and adapt as those needs evolve.

- Scalability: Supports both batch and real-time processing, enabling your business to handle growing data volumes without sacrificing performance.

- Improved decision-making: Real-time data processing ensures that your team has access to up-to-date information, allowing for timely and informed decisions.

- Enhanced data quality: Features like data observability and governance help maintain data accuracy and compliance, increasing trust in your data.

- Community support: A strong community of developers often supports these tools, providing resources and shared knowledge for troubleshooting and enhancements.

- Integration capabilities: Connects easily with various data sources and systems, streamlining data management across your organization.

Costs and Pricing of Open Source ETL Tools

Selecting open source ETL tools requires an understanding of the various pricing models and plans available. Costs vary based on features, team size, add-ons, and more. The table below summarizes common plans, their average prices, and typical features included in open source ETL solutions:

Plan Comparison Table for Open Source ETL Tools

| Plan Type | Average Price | Common Features |
|---|---|---|
| Free Plan | $0 | Basic data extraction, limited integrations, and community support. |
| Personal Plan | $5-$25/user/month | Enhanced data transformations, personal support, and limited customization. |
| Business Plan | $50-$100/user/month | Advanced data processing, multiple integrations, and team collaboration tools. |
| Enterprise Plan | $100-$500/user/month | Full customization, enterprise-level support, and comprehensive security features. |
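Because the paid plans are priced per user per month, the yearly license bill grows with team size: annual cost = price per user per month × users × 12. The sketch below applies that to the ranges in the table; the `annual_cost_range` function and the 10-person team are hypothetical, and it assumes billing exactly as listed, with no annual discounts or flat-rate tiers.

```python
# Average per-user monthly price ranges (USD) from the plan comparison table above.
PLAN_PRICES = {
    "free": (0, 0),
    "personal": (5, 25),
    "business": (50, 100),
    "enterprise": (100, 500),
}


def annual_cost_range(plan: str, users: int) -> tuple:
    """Return the (low, high) yearly license cost for a per-user, per-month plan."""
    low, high = PLAN_PRICES[plan]
    return low * users * 12, high * users * 12


print(annual_cost_range("business", 10))  # (6000, 12000)
```

License fees are only part of the total cost of ownership; hosting, maintenance, and the engineering time needed to run self-managed open source tools should be budgeted separately.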

Open Source ETL Tools (FAQs)

Here are some answers to common questions about Open Source ETL Tools:

What are the limitations of ETL tools?

ETL tools often don’t store data permanently, which means you need additional storage solutions. They can also introduce data latency, causing delays in data availability. The learning curve can be steep, and scaling the tools to handle large data volumes may require extra resources. Furthermore, they might struggle with unstructured data.

Which open source ETL tool is best?

The best open source ETL tool depends on your specific needs. Tools like Apache NiFi are great for real-time data flow, while Talend Open Studio is excellent for broad data integration tasks. Consider factors like your team’s expertise, data complexity, and integration needs when choosing.

What can advanced ETL tools convert structured and unstructured data into?

Advanced ETL tools can load and convert both structured and unstructured data into formats compatible with systems like Hadoop. They handle multiple files in parallel, simplifying the process of merging diverse data into a unified transformation workflow.

What is the difference between API and ETL tools?

APIs are ideal for real-time data exchange and communication between applications. In contrast, ETL tools are better suited for batch processing tasks, where data from multiple sources needs to be consolidated, transformed, and loaded into a target system for analysis.

How do ETL tools handle data security?

ETL tools handle data security by implementing encryption, access controls, and compliance measures. They ensure that sensitive data is protected during extraction, transformation, and loading processes. Some tools also offer built-in auditing features to track data access and modifications.

Can ETL tools integrate with cloud services?

Yes, many ETL tools integrate seamlessly with cloud services. They support data movement to and from cloud platforms like AWS, Google Cloud, and Azure, enabling you to leverage cloud storage and processing capabilities for your data workflows.
