The use of artificial intelligence (AI) in test automation is the latest trend in quality assurance. Testing in general, and test automation in particular, seem to have caught the “everything's better with AI” bug.

Since AI, machine learning, and neural networks are the hottest thing right now, it is perhaps inevitable that AI would find its way into test automation somehow.

A Background in Test Automation

I recall a time several years ago, back when test automation was still new, when one of my quality assurance teams was working on a project for a large customer. It was a mobile application with millions of users and monthly release cycles. The QA team was usually relaxed during the first two weeks of the cycle and working frantically thereafter till release—one of the peculiar side effects of agile software development that you don’t read about in the headlines!

Finally, one of the QA leads, fed up with twiddling his thumbs during the lean two weeks, started working on a framework for test automation. He wrote up some test scripts in Ruby with Selenium / Appium and Jenkins for a rudimentary pipeline, including some pretty reports with Red / Yellow / Green indicators for test failure/success.

He successfully did this for a couple of release cycles. That’s when we presented it to our Quality Chief, who pitched it to our customers and got them excited enough to pay for automated testing as a sub-project. We were on cloud nine, on the bleeding edge of things!

Bleeding edge took on a new meaning, however, in a few months when we discovered that fundamental truth about test automation that any QA automation engineer worth their salt wrestles with today:

Why am I saying this? Because pretty soon, we were feeling like Alice in Mordor-land running like crazy with the Red Queen:

We had just discovered the cold reality of the test-automation trap: one of our QA engineers had to keep the automation suite up-to-date with the application almost every release cycle because the changes were coming in fast and thick!

One field name change and our automation software tests were down the proverbial rabbit hole. The start of a new release was especially troublesome as the software development team made tons of new additions.

Test automation has not fundamentally changed since then because it still requires continuous monitoring and maintenance. Any possibility for dramatic improvement in the approach and implementation has only appeared with the recent advancement in the capabilities of AI.

AI Hype vs Reality

AI technology in its current avatar is mostly about using machine learning algorithms to train models with large-ish volumes of data and then using the trained models to make predictions or generate some desired output. Almost all of AI fits into this admittedly oversimplified description. As far as we are concerned, though, the major question here is:

Will AI actually be able to help automatically generate and update test cases? Find bugs? Improve code coverage?

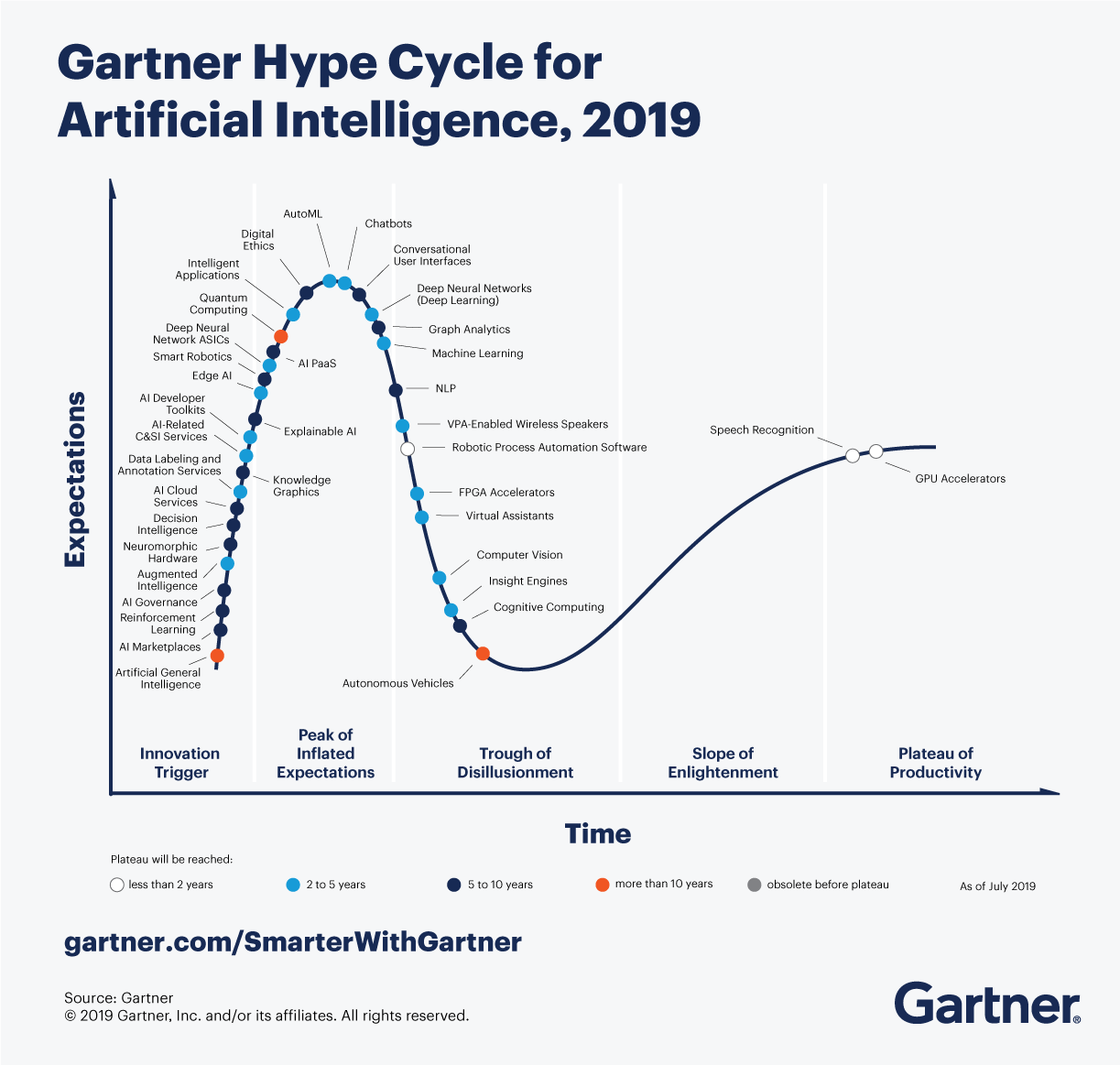

The answer to that question is far from clear right now because we’re at the peak of the hype cycle for AI. A specific sub-field, deep learning, has caused a lot of this excitement.

What I find most interesting about this graph is this:

If Siri is supposed to be past the hype curve, then we’re a long way from Kansas, Dorothy!

I mean, I know many who have to repeat themselves to get Siri to understand what they’re saying (no offense meant, Siri fans. Seriously 😉).

The hype curve tells us that there is a lot of excitement about the potential of AI, which cools off before everyone gets into the bread-and-butter, business-as-usual mode. In simple terms, what Gartner’s fancy graph is saying is this:

However, while we’re a long way from the singularity, AI in its current avatar still has the potential to significantly improve test automation.

How Does Machine Learning Produce Automated Tests?

- Training: In the training phase, the machine learning model needs to be trained on a specific organizational dataset, including the codebase, application interface, logs, test cases, and even specification documents. Not having a sufficiently large training dataset can reduce the efficacy of the algorithm.

  Some tools have pre-trained models that are updated through continuous learning for specific applications like UI testing so that generalized learning can be used in a specific organization.

- Output / Result generation: Depending on the use case, the model generates test cases, checks existing test cases for code coverage, completeness, accuracy, and even performs tests. In all cases, a tester needs to check the output generated to get validation and ensure that it is usable.

  If we use the analogy of self-driving cars, the results are more like driving assistance than an actual driverless car.

- Continuous improvement: As an organization keeps using the tool regularly, the training data keeps increasing, thereby potentially increasing the accuracy and effectiveness of existing trained networks. In short, the AI system keeps learning and improving.


Applications Of AI In Test Automation

Let's take a closer look at some applications of AI in test automation including unit testing, user interface testing, API testing, and maintaining an automation test suite.

Creating And Updating Unit Tests

Unit testing, often used as part of continuous testing, continuous integration / continuous delivery (CI / CD) in DevOps, can be a real pain in the… asteroid belt.

Typically, developers spend significant amounts of time authoring and maintaining unit tests, which is nowhere near as much fun as writing application code. In this instance, AI-based products for automated unit test creation can be useful, especially for those organizations that plan to introduce unit tests late in the product life cycle.

Benefits:

- AI-based automated unit tests are a significant step ahead of template-based automated unit test generation using static or dynamic analysis. The tests so generated are actual code, not just stubs.

- AI-based unit tests can be generated very quickly, which is useful for a large existing codebase.

- Developers only need to review and modify the generated tests rather than write them from scratch, so a unit regression suite can be set up relatively quickly.

Limitations:

- AI-generated unit tests simply mirror the code on which they are built. They cannot as yet guess the intended functionality of the code. If the code does not behave as intended, the unit test generated for that code will reflect that unintended behavior.

  This is a significant minus because the whole point of unit tests is to enforce and verify an implicit or explicit contract.

- Unit tests generated using machine learning can break existing working unit tests, and it is up to a developer to ensure that this does not happen.

- Developers need to write tests for complex business logic themselves.

Automated User Interface Testing

This is an area where AI is beginning to shine. In AI-based UI testing, test automation tools parse the DOM and related code to ascertain object properties. They also use image recognition techniques to navigate through the application and verify UI objects and elements visually to create UI tests.

Additionally, AI test systems use exploratory testing to find bugs or variations in the application UI and generate screenshots for later verification by a QA engineer. Similarly, the visual aspects of the System Under Test (SUT) such as layout, size, and color can be verified.
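As a rough illustration of visual verification with some tolerance, here is a minimal Python sketch. It swaps in a plain thresholded pixel comparison for the trained image models that commercial tools use, and it represents screenshots as nested lists of grayscale values instead of real image files. The names `changed_fraction` and `needs_review` and the default thresholds are assumptions made for this example.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0-255; a stand-in for a real screenshot


def changed_fraction(baseline: Image, candidate: Image, pixel_tolerance: int = 10) -> float:
    """Return the share of pixels whose brightness differs by more than the tolerance."""
    if len(baseline) != len(candidate) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, candidate)
    ):
        raise ValueError("screenshots must have the same dimensions")
    total = sum(len(row) for row in baseline)
    changed = sum(
        1
        for row_a, row_b in zip(baseline, candidate)
        for a, b in zip(row_a, row_b)
        if abs(a - b) > pixel_tolerance
    )
    return changed / total if total else 0.0


def needs_review(baseline: Image, candidate: Image, max_changed: float = 0.02) -> bool:
    """Flag a screenshot for a QA engineer when too much of it has changed."""
    return changed_fraction(baseline, candidate) > max_changed
```

A slight brightness shift across the whole screen stays under the per-pixel tolerance and is ignored, while a block of clearly different pixels pushes the changed fraction over the limit and sends the screenshot to a human for review.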

Benefits:

- Automated UI testing can lead to increased code coverage.

- Minor deviations in the UI do not cause the test suite to fail. Product AI models can handle these.

Limitations:

- For any modern application, the number of platforms, app versions, and browser versions is large. It is not clear how well AI-based UI automation performs under these conditions. However, cloud testing tools can execute tests in parallel, so this is going to be an interesting space to watch!

Using AI To Assist In API Testing

Even without AI, automating API testing is a non-trivial task since it involves understanding the API and then setting up tests for a multitude of scenarios to ensure depth and breadth of coverage.

Current API test automation tools, like Tricentis and SoapUI, record API activities and traffic to analyze and create tests. However, modifying and updating tests requires testers to delve into the minutiae of REST calls and parameters, and then update the API test suite.

AI-based API automation testing tools attempt to mitigate this problem by examining traffic and identifying patterns and connections between API calls, effectively grouping them by scenario. Tools also use existing tests to learn about relationships between APIs, use these to understand changes in APIs, and update existing tests or create new scenario-based tests.
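To give a feel for what "grouping calls by scenario" means, here is a minimal Python sketch. It is a rule-based stand-in, not a learned model: it groups recorded calls by session and treats each distinct sequence of normalized calls as one scenario. The traffic records, the session IDs, and the helper names `normalize` and `scenarios` are all hypothetical.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Hypothetical recorded traffic: (session_id, HTTP method, path).
TRAFFIC: List[Tuple[str, str, str]] = [
    ("s1", "POST", "/login"),
    ("s1", "GET", "/orders/17"),
    ("s1", "POST", "/orders/17/pay"),
    ("s2", "POST", "/login"),
    ("s2", "GET", "/orders/42"),
    ("s2", "POST", "/orders/42/pay"),
    ("s3", "POST", "/login"),
    ("s3", "GET", "/profile"),
]


def normalize(path: str) -> str:
    """Replace numeric path segments with a placeholder so similar calls match."""
    return re.sub(r"/\d+(?=/|$)", "/{id}", path)


def scenarios(traffic: List[Tuple[str, str, str]]) -> Counter:
    """Group calls by session, then count each distinct normalized call sequence."""
    by_session: Dict[str, List[str]] = defaultdict(list)
    for session_id, method, path in traffic:
        by_session[session_id].append(f"{method} {normalize(path)}")
    return Counter(tuple(calls) for calls in by_session.values())


if __name__ == "__main__":
    for sequence, count in scenarios(TRAFFIC).most_common():
        print(count, " -> ".join(sequence))
```

With this sample traffic, sessions `s1` and `s2` collapse into the same "log in, view order, pay" scenario, and `s3` forms a separate "log in, view profile" scenario. Each distinct sequence is a candidate for one scenario-based test.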

Benefits:

- For novice testers or those without programming experience, this could be really helpful to get them to “hit the ground running”.

- Once again, change management would be significantly easier given that at least some of the API changes can be handled by an AI automation tool.

Limitations:

- In general, API testing is difficult to set up, and not many tools offer machine-learning-based capabilities in this space. The ones that do seem to have pretty rudimentary capabilities.

Automation Test Maintenance

AI-based tools can evaluate changes to the code and fix several existing tests that don't align with those changes, especially if those code changes are not too complex. Updates to UI elements, field names, and the like need not break the test suite anymore.

Some AI tools monitor running tests and try out modified variants for failed tests by choosing UI elements based on the best fit. They can also verify test coverage and supplement the gaps if needed.
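The "best fit" idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any specific tool works: elements are plain dictionaries of attributes rather than real DOM nodes, the score is ordinary string similarity from the standard library's `difflib` rather than a trained model, and the names `similarity` and `best_fit` and the 0.6 threshold are made up for the example.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Optional

Element = Dict[str, str]  # simplified stand-in for a DOM element's attributes


def similarity(expected: Element, candidate: Element) -> float:
    """Average string similarity over the attributes recorded for the original element."""
    scores = [
        SequenceMatcher(None, value, candidate.get(key, "")).ratio()
        for key, value in expected.items()
    ]
    return sum(scores) / len(scores) if scores else 0.0


def best_fit(
    expected: Element, candidates: List[Element], threshold: float = 0.6
) -> Optional[Element]:
    """Return the closest candidate, or None if nothing is similar enough."""
    if not candidates:
        return None
    best = max(candidates, key=lambda candidate: similarity(expected, candidate))
    return best if similarity(expected, best) >= threshold else None


if __name__ == "__main__":
    # The test was recorded against a field whose id has since been renamed.
    recorded = {"id": "user_name", "tag": "input", "label": "User name"}
    current_page = [
        {"id": "username", "tag": "input", "label": "Username"},
        {"id": "password", "tag": "input", "label": "Password"},
        {"id": "submit", "tag": "button", "label": "Log in"},
    ]
    print(best_fit(recorded, current_page))
```

Here the renamed `username` field is selected because it is by far the closest match to the recorded attributes. The threshold matters: when nothing on the page is similar enough, returning `None` and failing the test is safer than silently clicking the wrong element.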

AI-Based Test Data Generation

Test data generation is another promising area for AI models. A machine learning model trained on existing production datasets can generate synthetic data sets, such as personal profile photographs and attributes like age and weight.

In this way, the test data generated is very similar to production data, which is ideal for use in software testing. One type of model commonly used to generate such data is the Generative Adversarial Network (GAN).
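Training a GAN does not fit in a short example, so the Python sketch below swaps in a much simpler statistical technique to show the general idea of learning from production-like data and then sampling new records: it estimates a mean and standard deviation per field and draws values from a normal distribution. The sample records, field names, and the helpers `fit` and `generate` are hypothetical.

```python
import random
import statistics
from typing import Dict, List, Tuple

# Hypothetical production sample; real data would come from an anonymized export.
PRODUCTION: List[Dict[str, float]] = [
    {"age": 34, "weight_kg": 72.5},
    {"age": 29, "weight_kg": 64.0},
    {"age": 45, "weight_kg": 81.3},
    {"age": 52, "weight_kg": 77.8},
    {"age": 38, "weight_kg": 69.1},
]


def fit(records: List[Dict[str, float]], fields: List[str]) -> Dict[str, Tuple[float, float]]:
    """Learn a (mean, standard deviation) pair for each numeric field."""
    return {
        field: (
            statistics.mean([record[field] for record in records]),
            statistics.stdev([record[field] for record in records]),
        )
        for field in fields
    }


def generate(
    params: Dict[str, Tuple[float, float]], count: int, seed: int = 0
) -> List[Dict[str, float]]:
    """Sample synthetic records; values are clamped at zero and rounded to one decimal."""
    rng = random.Random(seed)  # fixed seed keeps test data reproducible
    return [
        {
            field: round(max(0.0, rng.gauss(mean, stdev)), 1)
            for field, (mean, stdev) in params.items()
        }
        for _ in range(count)
    ]


if __name__ == "__main__":
    model = fit(PRODUCTION, ["age", "weight_kg"])
    for record in generate(model, 3):
        print(record)
```

This toy model treats every field independently, so it cannot reproduce relationships between fields, such as a link between age and weight. Capturing that kind of structure, or generating images, is where a trained generative model like a GAN comes in.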

Test Automation Tools That Use Machine Learning

Check out the tools below if you're looking for software that uses machine learning to conduct and track automated tests. Many include open source or codeless options to meet the needs of your testing team.

Conclusion

Artificial intelligence has significantly impacted testing tools and methods, and test automation in particular. An overview of the current tools promising AI shows that, while many new features are being added, several of those features are still on their way to maturity.
