On average, organizations deal with 400 data sources regularly. With literally hundreds of datasets to contend with, data integration and accurate data analysis can be impossible without the right workflows.

In this guide, I'll introduce you to ETL workflows and provide tips for building ETL data pipelines that support enhanced functionality and data quality.

What are ETL Workflows?

Our ultimate guide to ETL explains the specifics of the ETL process, including extract, transform, and load processes. For optimal functionality, these processes must work together so that information flows efficiently from the data source to data warehousing.

ETL workflows are responsible for this overall process. They typically involve various API tools, connectors, and data processing steps to ensure the extract, transform, and load process works well and supports organizational needs.

Benefits of ETL Workflows

Some benefits of strong ETL workflows include:

- Enhanced data access. With the right ETL processes, you can support real-time data access for employees. The extract, transform, and load process ensures that data is ready for use once it hits data storage, making it easier to run SQL reports, data models, and other queries.

- Ability to handle big data. Advanced ETL processes can tackle large data sets and integrate unstructured data without time-consuming manual processes. This makes it easier for organizations to manage and benefit from big data.

- Better data transformation processes. The proper ETL workflow integrates elements such as data scrubbing and transformation to enhance the conversion of raw data into structured data sets you can use.

- Support for efficiency via automation. Importing source data, running validation processes, and other tasks can be handled by ETL tools that support automation, reducing the time it takes to manage data.

- Ability to apply business rules within data management processes. You can customize ETL solutions to meet various organizational needs, including integrating business and compliance rules into the extract, transform, and load workflow.

ETL Process Overview

Before discussing the key components of ETL workflows and how to model them, let’s first examine how these processes work, including the goals and challenges of each step.

Extract

During the extract part of ETL, information is gathered from one or more data sources. Data extraction may encounter raw data as well as previously processed data, and you may deal with various file types, including CSV and XML.

- Goal: To capture the most holistic, comprehensive data possible.

- Challenges: Identifying data lakes and sources, gaining appropriate access to data, and timing data gathering to ensure up-to-date processes.

Transform

The data transformation process involves converting data to formats that work with the relational databases and other data storage and analysis methods you want to use. During this step of ETL, you may consider tools to conduct data mapping and cleansing, deduplicating, formatting, adding metadata, and other tasks—both via automation and manual workflows.

- Goal: Clean, usable data that is formatted to work within your target system or data store.

- Challenges: Data quality is a common challenge organizations face when transforming data; the lower the quality, the more data transformation work is required. Other challenges include potential data loss, integrating multiple data types, and ensuring security throughout the process.

Load

In the final step of ETL processes, you load data into the final data warehouse, making it available for use as needed by the organization.

- Goal: To ensure secure, clean data is ready for use in analytics or by CRM systems or other business software.

- Challenges: Ensuring data quality and security are also challenges at this step, and organizations must create data governance processes to define who can access data and how they do it. Supporting real-time—or as close to it as possible—access to data may also be important, but batch processing can cause time lags in the process.

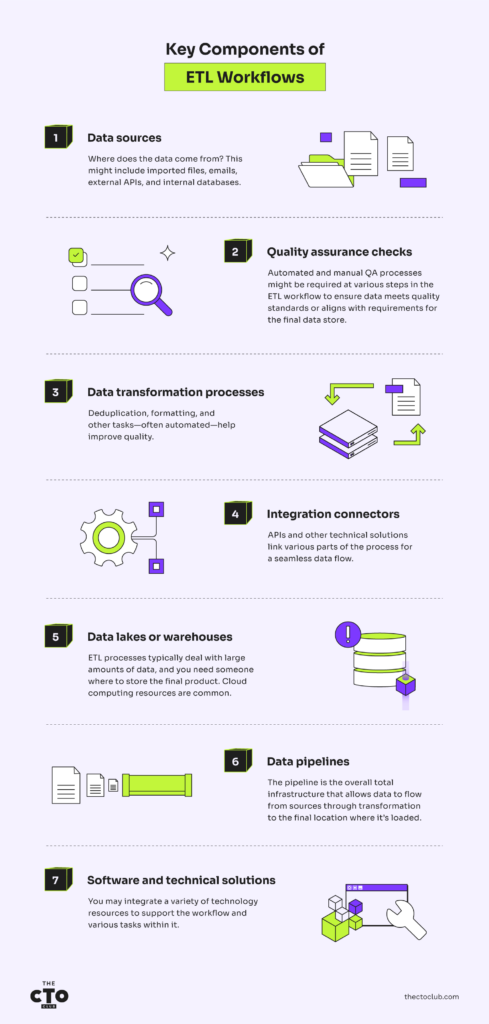

Key Components of ETL Workflows

The first step in building ETL workflows is to consider the key components that should be included. Use cases for these workflows vary, and you may need to consider unique data and process dependencies for your organization.

However, some components data engineers commonly consider for ETL workflows include:

- Data sources. Where does the data come from? This might include imported files, emails, external APIs, and internal databases.

- Quality assurance checks. Automated and manual QA processes might be required at various steps in the ETL workflow to ensure data meets quality standards or aligns with requirements for the final data store.

- Data transformation processes. Deduplication, formatting, and other tasks—often automated—help improve quality.

- Integration connectors. APIs and other technical solutions link various parts of the process for a seamless data flow.

- Data lakes or warehouses. ETL processes typically deal with large amounts of data, and you need someone where to store the final product. Cloud computing resources are common.

- Data pipelines. The pipeline is the overall total infrastructure that allows data to flow from sources through transformation to the final location where it’s loaded.

- Software and technical solutions. You may integrate a variety of technology resources to support the workflow and various tasks within it.

Modeling ETL Workflows

When modeling your ETL pipelines, you must consider whether you want to process via batch or stream.

Building an ETL Pipeline With Batch Processing

With batch processing, the workflow manages data in batches or chunks. Each batch is typically predetermined by time—you might batch daily, periodically through the day, or at the top of each hour, for example.

Batch processing is a good solution when:

- You want to leverage economies of scale

- You have automation in place that can handle large batches of data quickly

- Data access and processing is not highly time-sensitive

Building an ETL Pipeline With Stream Processing

Stream processing allows data to flow through ETL in real-time without building up batches of data at any step in the process. It’s more flexible than batch processing, but it can be more difficult to ensure data quality and consistency with this type of pipeline.

Stream processing is a good solution when:

- Data enters your process in small chunks and at inconsistent intervals

- Access to data is time-sensitive, making it critical to get data into the process immediately

- You want access to trending insights without waiting for batched data to be processed

ETL Workflows Examples

Understanding your industry's specific data flow and management needs is critical to successfully planning for ETL workflows. Consider these examples from different industries to understand how requirements and dependencies might vary.

ETL for Healthcare Data Flows

Healthcare organizations require accurate, timely access to patient and treatment data, making ETL processes essential to success. Data can come from a variety of sources, including patient portals, electronic health records, imaging equipment, and third-party vendors such as pharmaceutical companies.

One of the biggest challenges for healthcare ETL is creating secure processes that comply with regulations such as HIPAA. These processes also depend on data sources fed by patients, providers, and automation, creating extra challenges in the data transformation process.

When modeling healthcare data pipelines, you may need to use stream and batch processing. For example, providers may need real-time access to diagnostic data, while coding and billing data can be batched at the end of the day for claims purposes.

ETL for E-Commerce Data Flows

E-commerce data comes from many sources, including emails, customer portals, APIs and apps, social media, websites, CRM systems, and accounting or payment processes. This creates an enormous challenge in transforming data without degrading it.

Batch processing typically works well for many e-commerce processes. For example, you might grab order data every hour and feed it into ETL processes that support warehouse and shipping tasks. You can also scrape payment data daily so that financial records are updated.

However, if you want to support 24/7 self-service for customers, stream-based workflows might be more appropriate. For instance, say you have a chatbot that provides customers with information about orders, payments, returns, and credits. If your ETL processes run on daily batches, the information your chatbot provides is always at least 24 hours out of date.

ETL for Financial Data Flows

Banks, credit card companies, money-management apps, and other organizations and services in the finance sector rely heavily on accurate data. These organizations must also contend with heavy regulations and compliance standards in keeping data secure.

Data sources can include imported data from other financial organizations, ACH and clearinghouse data, information from customer-facing apps, and less structured formats like emails. Financial organizations may also want to use a combination of batch and stream pipelines to meet customer needs.

Technological Advancements in ETL Processes

Several decades ago, cloud technology revolutionized data processing, and it's still a huge component of advancements in data warehouse software and solutions. However, currently, trends in ETL technology advancement tend to focus on integrating AI, machine learning, and automation into workflows. Some specific advancements include:

- AI's ability to deal with Big Data. Artificial intelligence tools can process enormous amounts of data in seconds, creating support for the scalability of ETL processes that haven't existed before.

- Natural language processing. Machine learning and natural language processing allow automated solutions to approach data transformation with a more comprehensive set of "critical thinking" skills than was previously possible. This creates opportunities for automating tasks that historically required human intervention.

- Support for data governance. Almost every industry today is dealing with enhanced regulatory requirements, and technology solutions increasingly help support compliance and the security of data.

Best Practices for Optimizing ETL Workflows

What works best for another organization may not be the best practice for your business when it comes to ETL. However, I've gathered some general best practices you can implement to optimize ETL workflows in almost any environment.

- Choose the right tools for your needs. Do some research on ETL workflow and process tools to find partners and solutions that meet your unique data needs. Consider putting a team together to map desired ETL workflows, list challenges you face, and evaluate potential solutions in light of those factors.

- Make sure your workflow choices are correct. Take time to understand ETL versus ELT and whether you need batch or stream workflows.

- Monitor your processes regularly. Use tools such as SQL Server Performance Monitor—or other relevant performance monitors—to understand how your ETL workflows perform. Keep an eye on efficiency and quality metrics, and make tweaks as needed for continuous improvement.

- Integrate technology that supports quality and scale. Consider options such as parallel computing and data compression to optimize your workflows.

Takeaways

The design of your ETL workflows can make or break your data processes. Take time to consider the implications of workflow decisions, map out ideal workflows, and choose the right tools to support data extraction, transformation, and loading.

Keep up with technology and data best practices by subscribing to the CTO Club newsletter.